-

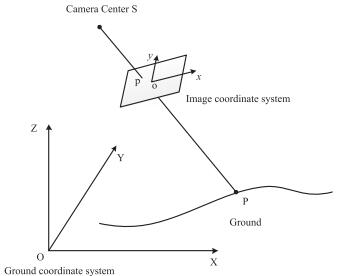

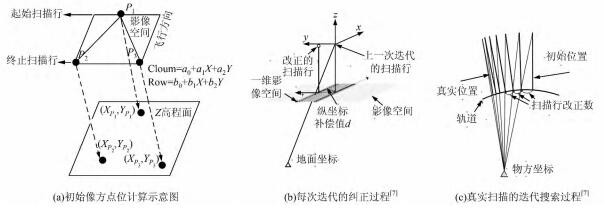

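, 万一 (Wan Yi), 胡堃 (Hu Kun), 郑茂腾 (Zheng Maoteng). Theory and Methods of Photogrammetric Processing of Optical Satellite Imagery (光学卫星影像摄影测量处理理论与方法). Science Press, ISBN: 978-7-03-072801-2, 438 pp., 2022.08.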

-

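李彦胜 (Li Yansheng), , 陈瑞贤 (Chen Ruixian), 马佳义 (Ma Jiayi). Intelligent Scene Understanding of High-Resolution Remote Sensing Imagery (高分辨率遥感影像场景智能理解). Science Press, ISBN: 978-7-03-071437-4, 120 pp., 2022.02.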

-

. Theory and Methods of Visual Inspection Based on Image Sequences (基于序列图像的视觉检测理论与方法). Wuhan University Press, ISBN: 978-7-307-06654-0, 148 pp., 2008.12.

-

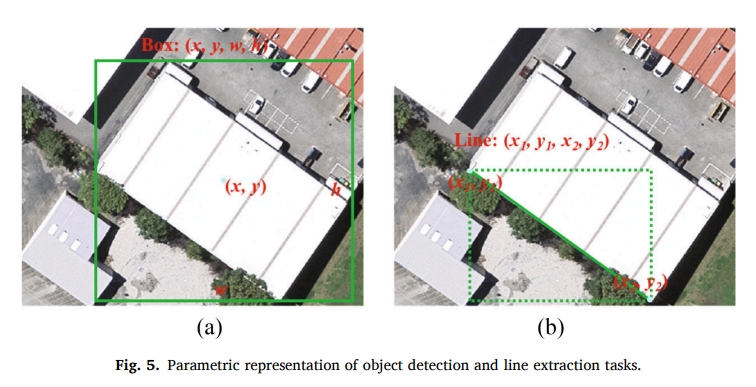

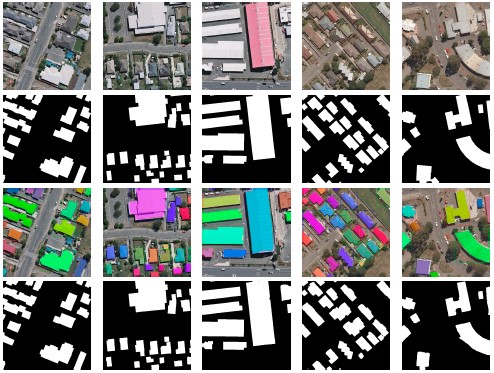

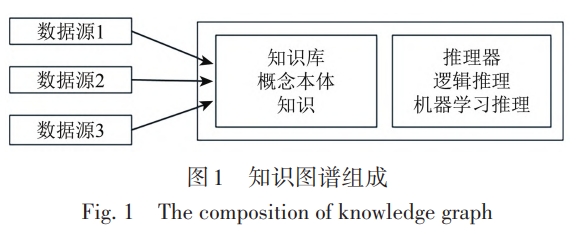

Shiqing Wei, Tao Zhang, Dawen Yu, Shunping Ji, , Jianya Gong. (2024) From Lines to Polygons: Polygonal Building Contour Extraction from High-Resolution Remote Sensing Imagery. In: ISPRS Journal of Photogrammetry and Remote Sensing 209: 213-232.

Abstract: Automated extraction of polygonal building contours from high-resolution remote sensing images is important for various applications. However, it remains a difficult task to achieve automated extraction of polygonal buildings at the level of human delineation due to diverse building structures and imperfect image conditions. In this paper, we propose Line2Poly, an end-to-end approach that uses feature lines as geometric primitives to achieve polygonal building extraction by recovering topological relationships among these lines within an individual building. To extract building feature lines with precision, we adopt a two-stage strategy that combines Convolutional Neural Network (CNN) and transformer architectures. A CNN-based module extracts preliminary feature lines, which serve as positional priors for initializing positional queries in the subsequent transformer-based module. For polygonal building contour reconstruction, we devise a learnable polygon topology reconstruction module that predicts adjacency relationships among discrete lines and integrates lines into building polygons. The resultant building polygons, based on feature lines, exhibit inherent regularity that aligns with manual labeling standards. Extensive experiments on the Vectorizing World Buildings dataset, the WHU aerial building dataset and the WHU-Mix (vector) dataset validate Line2Poly’s strong performance in building feature line extraction and instance-level building detection. Moreover, Line2Poly’s predictions show the highest level of agreement with manual delineations, with over 83% agreement on the WHU aerial building test set and 68.7% and 59.7% on the WHU-Mix (vector) test sets I and II, respectively. [full text] [link]
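The final polygon-assembly step can be illustrated with plain geometry. A minimal sketch, assuming the predicted adjacency has already been resolved into a cyclic order of lines (the names `intersect` and `lines_to_polygon` are hypothetical, and Line2Poly's learnable topology module is not reproduced here), takes each polygon vertex as the intersection of two consecutive feature lines:

```python
from typing import List, Tuple

Point = Tuple[float, float]
Line = Tuple[Point, Point]  # two points that define an infinite line


def intersect(a: Line, b: Line) -> Point:
    """Intersection of two infinite lines, each given by two points."""
    (x1, y1), (x2, y2) = a
    (x3, y3), (x4, y4) = b
    den = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if abs(den) < 1e-12:
        raise ValueError("lines are parallel; no unique vertex")
    t = ((x1 - x3) * (y3 - y4) - (y1 - y3) * (x3 - x4)) / den
    return (x1 + t * (x2 - x1), y1 + t * (y2 - y1))


def lines_to_polygon(lines: List[Line], order: List[int]) -> List[Point]:
    """Chain lines in an (assumed, already predicted) cyclic order.

    Vertex k is where line order[k] meets line order[k + 1].
    """
    n = len(order)
    return [intersect(lines[order[k]], lines[order[(k + 1) % n]]) for k in range(n)]
```

Because each feature line is treated as infinite, slightly short or broken segments still meet at a well-defined corner, which is one way line primitives can produce regular building outlines.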

-

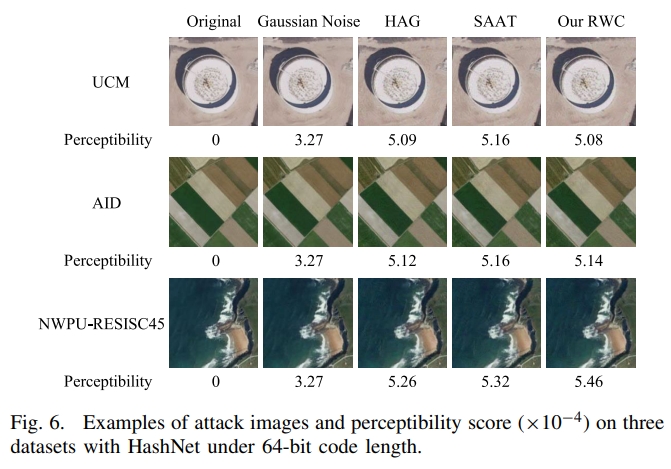

Yansheng Li, Mengze Hao, Rongjie Liu, Zhichao Zhang, Hu Zhu, . (2023) Semantic-Aware Attack and Defense on Deep Hashing Networks for Remote-Sensing Image Retrieval. In: IEEE Transactions on Geoscience and Remote Sensing, 61, 5627214.

Abstract: Deep hashing networks have been successful in retrieving interesting images from massive remote-sensing images. There is no doubt that security and reliability are critical in remote-sensing image retrieval (RSIR). Recent studies about natural image retrieval have shown the vulnerability of deep hashing networks to adversarial examples, but there are no existing research studies about the attack and defense of deep hashing networks in RSIR. Due to the large intraclass difference and high interclass similarity of remote-sensing images, the attack and defense methods on deep hashing networks for natural images cannot be directly applied to the remote-sensing images. Different from the widely adopted instance-aware hash codes that often present the suboptimum performance of the attack and defense on deep hashing networks, this article recommends the usage of semantic-aware hash codes, which take into account multiple samples in the given semantic categories, in both attack and defense. To pursue the strongest attack on RSIR, a novel semantic-aware attack with weights via multiple random initialization (RWC) is proposed. To alleviate the retrieval degradation caused by adversarial attacks, a new adversarial training defense method on deep hashing networks with the adversarial semantic-aware consistency constraint (ACN) is proposed. Extensive experiments on three typical open remote-sensing image datasets (i.e., UCM, AID, and NWPU-RESISC45) show that the proposed attack and defense methods on various deep hashing networks achieve better performance compared with the state-of-the-art methods. The source code will be made publicly available along with this article. [full text] [link]
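Hash-based retrieval ranks database images by the Hamming distance between binary codes. The abstract says semantic-aware codes "take into account multiple samples in the given semantic categories"; one plausible reading, assumed here and not taken from the paper, is a category code formed by a bitwise majority vote over the ±1 codes of that category's samples. The sketch below (hypothetical helper names; RWC and ACN themselves are not reproduced) shows both pieces:

```python
from typing import Dict, List, Sequence

Code = List[int]  # hash code with entries in {-1, +1}


def hamming(a: Sequence[int], b: Sequence[int]) -> int:
    """Number of bit positions where two ±1 codes differ."""
    return sum(1 for x, y in zip(a, b) if x != y)


def category_code(codes: List[Code]) -> Code:
    """Assumed semantic-aware code: bitwise majority vote, ties broken toward +1."""
    length = len(codes[0])
    return [1 if sum(c[i] for c in codes) >= 0 else -1 for i in range(length)]


def retrieve(query: Code, database: Dict[str, Code], k: int) -> List[str]:
    """Return the k database keys closest to the query in Hamming distance."""
    return sorted(database, key=lambda key: hamming(query, database[key]))[:k]
```

A category-level target like this is less sensitive to any single sample than an instance-level code, which is the intuition the abstract gives for preferring semantic-aware codes in attack and defense.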

-

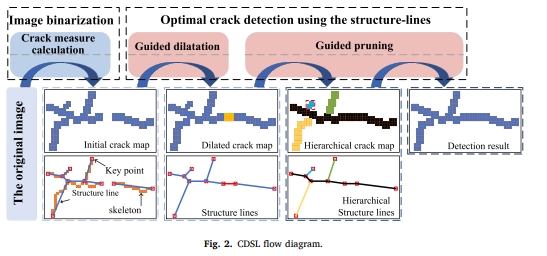

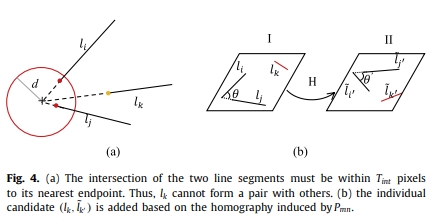

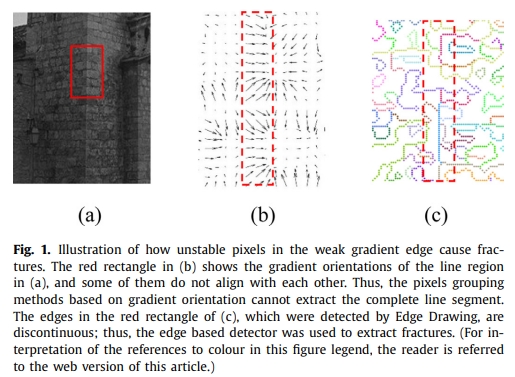

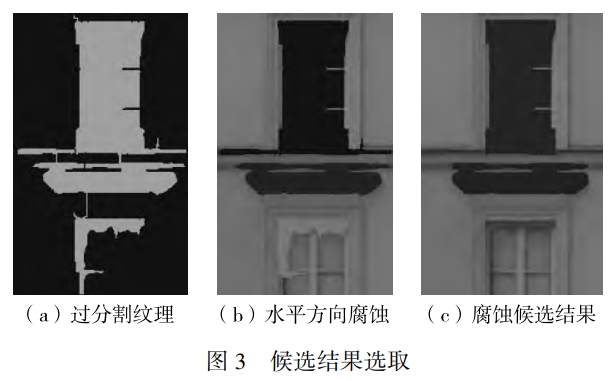

, Xinyi Lu, Yansong Duan, Dong We, Xianzhang Zhu, Bin Zhang, Bohui Pang. (2023) Robust Surface Crack Detection with Structure Line Guidance. In: International Journal of Applied Earth Observation and Geoinformation, 124: 103527.

Abstract: Crack detection plays a pivotal role in civil engineering applications, where vision-based methods find extensive use. In practice, crack images are sourced from Unmanned Aerial Vehicles (UAV) and handheld photography, and the balance between the utilization of global and local information is the key to detecting cracks from images of different sources: the former tends to eliminate interferences with a global perspective, whereas the latter pays more attention to the description of local details of cracks. However, many existing methods primarily target crack detection in handheld photographs and may not perform optimally on UAV-generated images or those with variable backgrounds or from different sources. In response to this challenge, we propose a robust and innovative method called Crack Detection with Structure Line (CDSL). The primary steps of this method can be summarized as follows: first, based on local information, an indicator called the “crack measure” is derived to directly generate a continuous crack map for effective image binarization; then, based on global information, the crack map is simplified in a unified and analyzable form using structure lines to perform a robust optimization for high-precision crack detection. The experiments we conducted on two publicly available datasets showed that CDSL provided competitive crack detection performance and outperformed four classical or current state-of-the-art methods by at least 13.0 % in the UAV dataset we collected. [full text] [link]

-

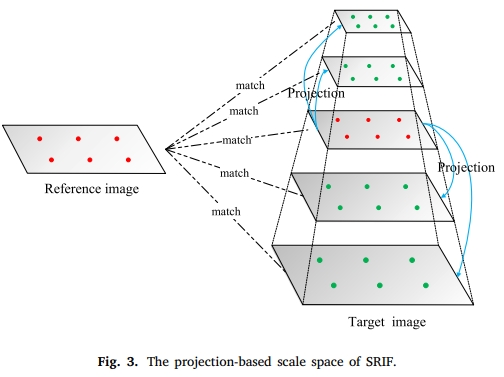

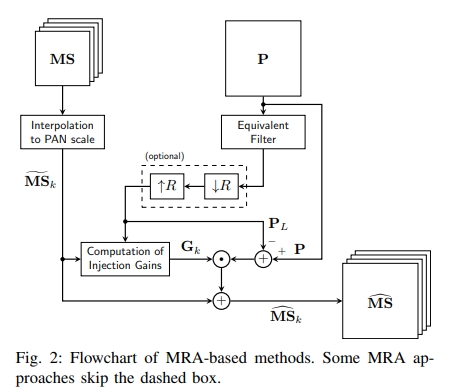

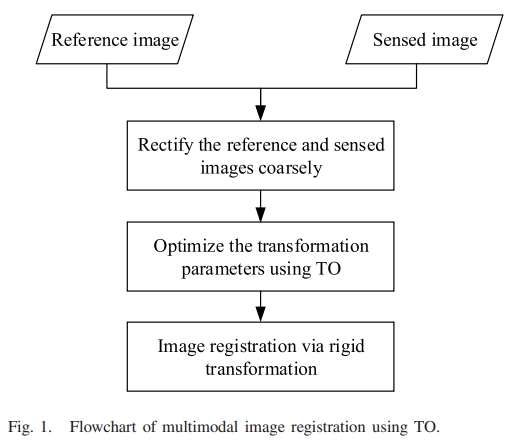

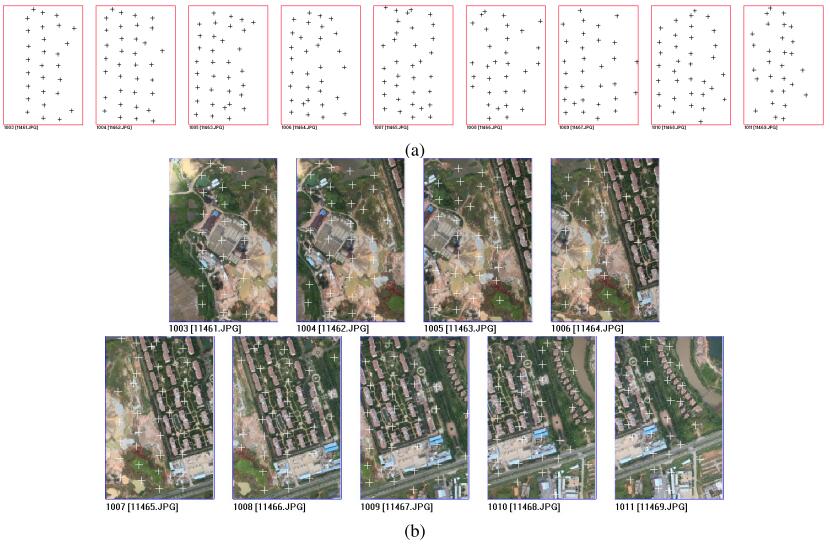

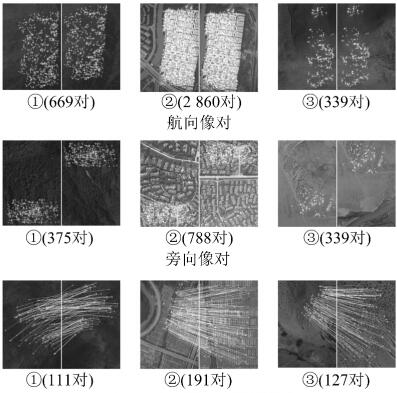

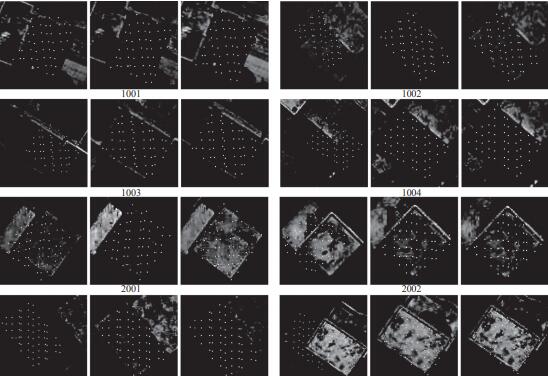

Jiayuan Li, Qingwu Hu, . (2023) Multimodal Image Matching: A Scale-Invariant Algorithm and an Open Dataset. In: ISPRS Journal of Photogrammetry and Remote Sensing 204: 77-88.

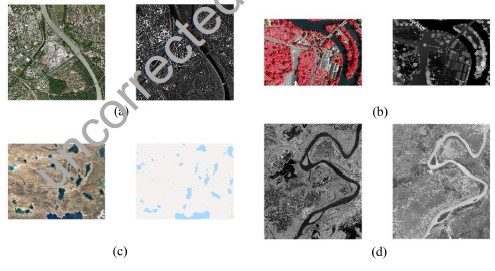

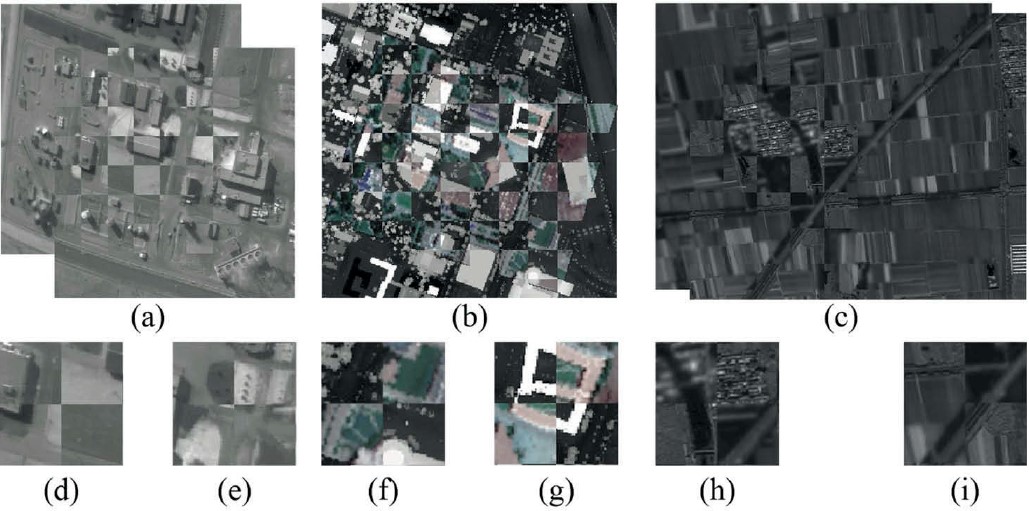

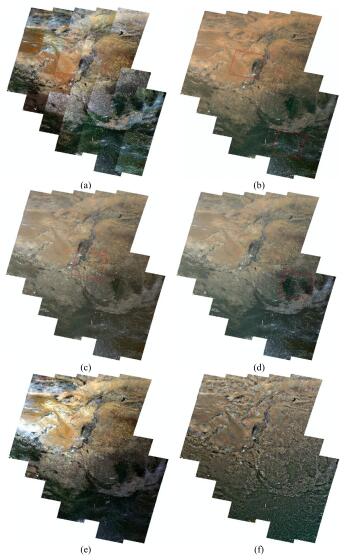

Abstract: Multimodal image matching is a core basis for information fusion, change detection, and image-based navigation. However, multimodal images may simultaneously suffer from severe nonlinear radiation distortion (NRD) and complex geometric differences, which pose great challenges to existing methods. Although deep learning-based methods have shown potential in image matching, they mainly focus on same-source images or single types of multimodal images such as optical-synthetic aperture radar (SAR). One of the main obstacles is the lack of public data for different types of multimodal images. In this paper, we make two major contributions to the community of multimodal image matching. First, we collect six typical types of images, including optical-optical, optical-infrared, optical-SAR, optical-depth, optical-map, and nighttime, to construct a multimodal image dataset with a total of 1200 pairs. This dataset has good diversity in image categories, feature classes, resolutions, geometric variations, etc. Second, we propose a scale and rotation invariant feature transform (SRIF) method, which achieves good matching performance without relying on data characteristics. This is one of the advantages of our SRIF over deep learning methods. SRIF obtains the scales of FAST keypoints by projecting them into a simple pyramid scale space, based on the finding that methods with and without scale space have similar performance under small scale change factors. This strategy largely reduces the complexity compared to the traditional Gaussian scale space. SRIF also proposes a local intensity binary transform (LIBT) for SIFT-like feature description, which can largely enhance the structure information inside multimodal images. Extensive experiments on these 1200 image pairs show that our SRIF outperforms current state-of-the-art methods, including RIFT, CoFSM, LNIFT, and MS-HLMO, by a large margin.
Both the created dataset and the code of SRIF will be publicly available at https://github.com/LJY-RS/SRIF. [full text] [link]

-

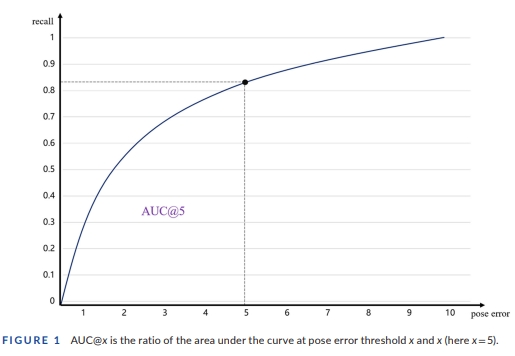

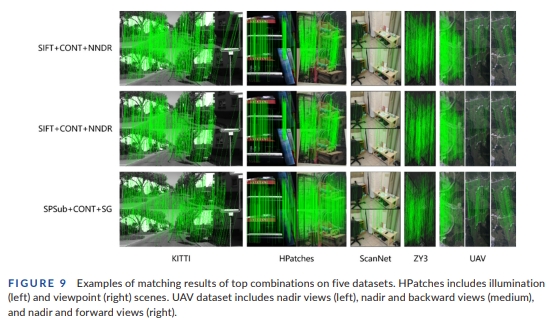

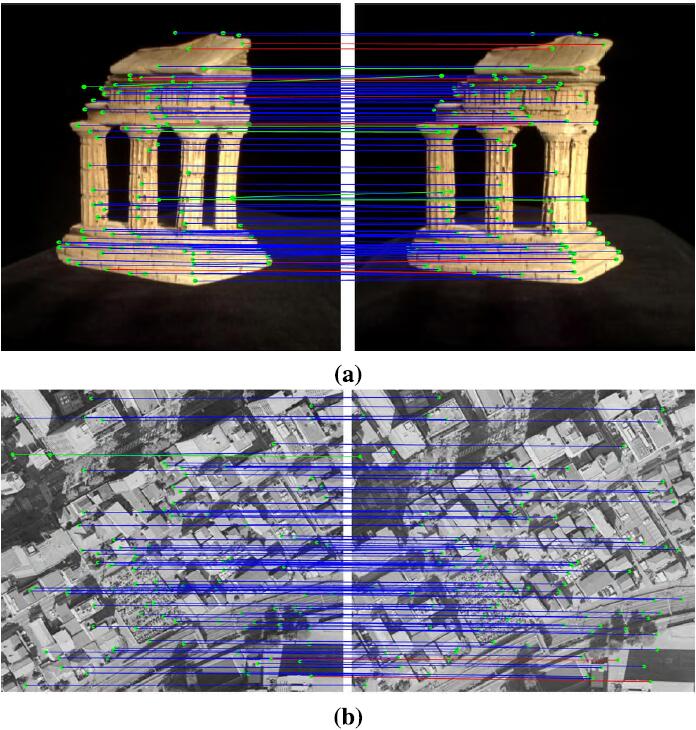

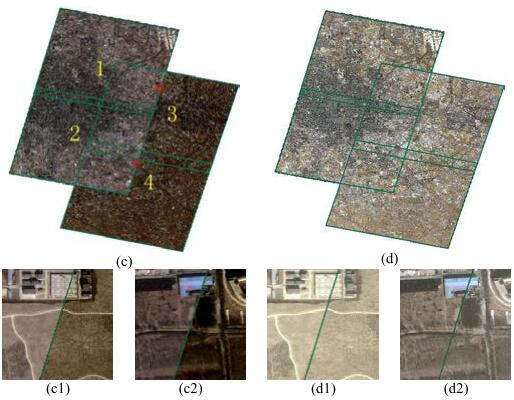

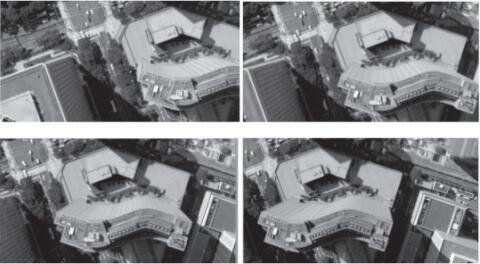

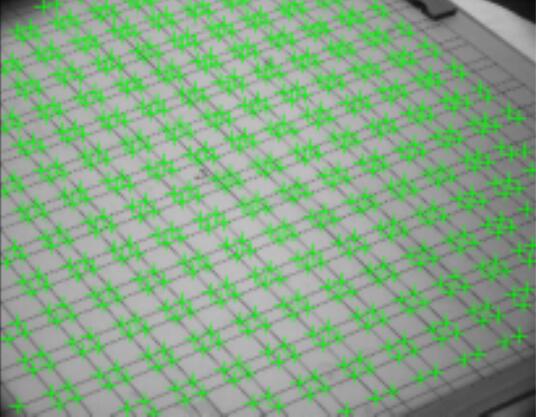

Shunping Ji, Chang Zeng, , Yulin Duan. (2023) An Evaluation of Conventional and Deep Learning-Based Image-Matching Methods on Diverse Datasets. In: The Photogrammetric Record, 38(182): 137-159.

Abstract: Image matching plays an important role in photogrammetry, computer vision and remote sensing. Modern deep learning-based methods have been proposed for image matching; however, whether they will surpass and take the place of the conventional handcrafted methods in the remote sensing field still remains unclear. A comprehensive evaluation on stereo remote sensing images is also lacking. This paper comprehensively evaluates the performance of conventional and deep learning‐based image-matching methods by dividing the matching process into feature point extraction, description and similarity measure on various datasets, including images captured from close‐range indoor and outdoor scenarios, unmanned aerial vehicles (UAVs) and satellite platforms. Different combinations of the three steps are evaluated. The experimental results reveal that, first, the performance of the different combinations varies between individual datasets, and it is difficult to determine the best combination. Second, by using more comprehensive indicators on all of the datasets, that is, the average rank and absolute rank, the combination of scale‐invariant feature transform (SIFT), ContextDesc and the nearest neighbour distance ratio (NNDR), and also the original SIFT, achieve the best results, and are recommended for use in remote sensing. Third, the deep learning‐based Sub‐SuperPoint extractor obtains a good performance, and is second only to SIFT. The learning based ContextDesc descriptor is as effective as the SIFT descriptor, and the learning based SuperGlue matcher is not as stable as NNDR, but leads to a few top‐performing combinations. Finally, the handcrafted methods are generally faster than the deep learning‐based methods, but the efficiency of the latter is acceptable. 
We conclude that although a full deep learning‐based method/combination has not yet beaten the conventional methods, there is still much room for improvement with the deep learning‐based methods because large‐scale aerial and satellite training datasets remain to be constructed, and specific methods for remote sensing images remain to be developed. [full text] [link]
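NNDR, the nearest neighbour distance ratio test recommended in this evaluation, keeps a match only when the closest descriptor is clearly closer than the second closest. A brute-force sketch follows (the function name `nndr_match` is hypothetical); the 0.8 ratio is the value Lowe suggested for SIFT and is an assumption here, not the setting used in the paper:

```python
import math
from typing import List, Sequence, Tuple


def nndr_match(desc_a: List[Sequence[float]], desc_b: List[Sequence[float]],
               ratio: float = 0.8) -> List[Tuple[int, int]]:
    """Match each descriptor in desc_a to desc_b using the distance ratio test."""
    if len(desc_b) < 2:
        raise ValueError("need at least two candidate descriptors for the ratio test")
    matches = []
    for i, a in enumerate(desc_a):
        # Euclidean distance from a to every descriptor in the other image, nearest first
        dists = sorted((math.dist(a, b), j) for j, b in enumerate(desc_b))
        (d1, j1), (d2, _) = dists[0], dists[1]
        # Accept only if the best candidate is clearly better than the runner-up
        if d1 < ratio * d2:
            matches.append((i, j1))
    return matches
```

Ambiguous points, such as repeated texture where two candidates are almost equally close, are rejected rather than matched to an arbitrary neighbour; that filtering is what the ratio test contributes beyond plain nearest-neighbour search.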

-

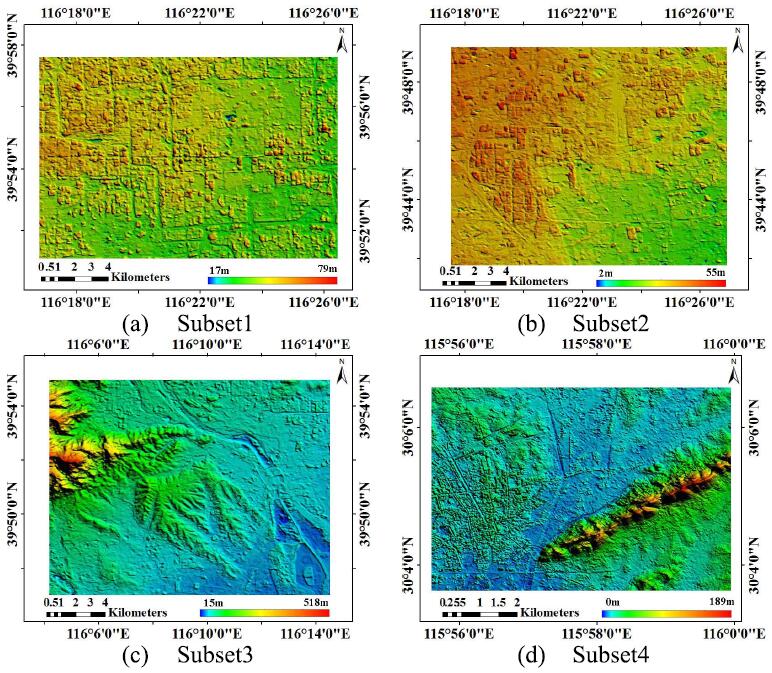

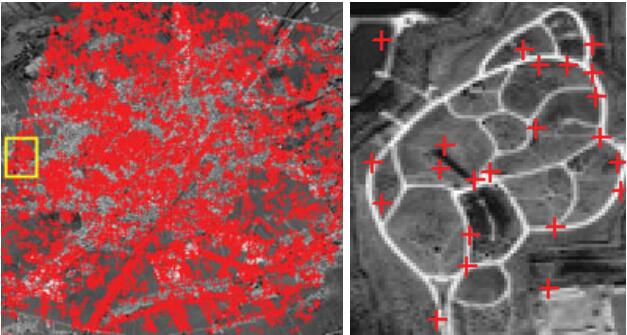

Zhongbin Li, , Mengqiu Wang. (2023) Solar Energy Projects Put Food Security at Risk. In: Science, 381 (6659).

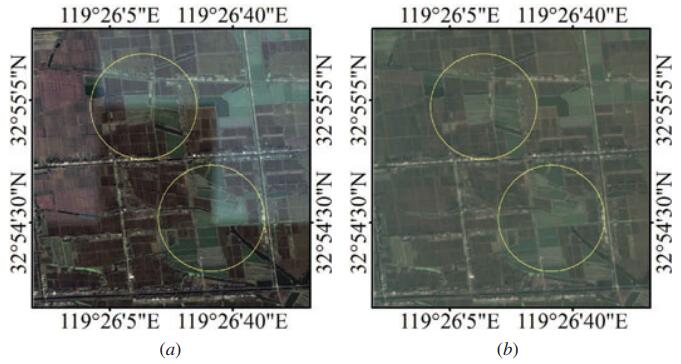

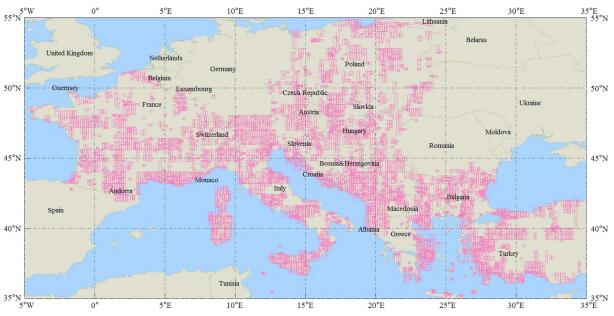

Abstract: 18 AUGUST 2023• VOL 381 ISSUE 6659 741 SCIENCE science. org built on farmland, threatening food security (2, 3). Given the ambitious climate pledges of signatory countries to the Paris Agreement, the area of land required to deploy global solar photovoltaics in the coming decades is expected to rise (4). Governments must act now to mitigate the fierce competition for land between solar energy and crops. Solar energy projects have encroached on farmland across the Northern Hemisphere (3). In 2017 alone, China deployed photovoltaic panels on about 100 km2 of farmlands in the North China Plain (3), one of China's most important agricultural regions. Solar photovoltaic panels have also been deployed over deserts, abandoned mines (5), artificial canals (6), reservoirs (7), and rooftops (8), but these options are less attractive to developers because they are more scarce, more unstable, or more expensive than farmlands. To ensure national food security, some countries have released strict farmland protection regulations [eg, China's Basic Farmland Protection Regulations in 1994, Germany's Federal Regional Planning Act in 1997, and South Korea's Farmland Act in 1994 (9)]. However, solar energy investors and developers continue to occupy farmland illegally (10). Local authorities provide inadequate enforcement, allowing development to proceed at the expense of agriculture. Mitigating solar energy's land competition will require technological innovation and more sustainable deployment strategies. For example, agrivoltaic systems have been proposed that would allow crops to grow under solar panels (11). However, the solar panels hinder mechanized farming and harvesting, and the solar photovoltaics need to be deployed at a position much higher than crops, making the project more expensive. Scientists have also developed foldable solar cells that can be integrated into buildings (12). 
Until these technologies are cost-effective and scalable, governments should preferentially use unproductive lands for large-scale photovoltaic deployment, prevent installations on finite arable land, and provide stricter enforcement of farmland protection policies. Satellite remote sensing technologies should be used to closely monitor solar photovoltaic panels' illegal farmland encroachment and quantify their impacts on food production. Illegally deployed solar photovoltaics should be demolished so that farmland can be restored. Governments, corporations, and nonprofit organizations should also provide funding to scientists to research and develop cost-effective, ecofriendly, energy-efficient solar cells, including agrivoltaic technology. Scientists should also work to better understand the adverse and unintended consequences of large-scale solar photovoltaic deployment to ensure that the technology provides net benefits in the future. [full text] [link]

-

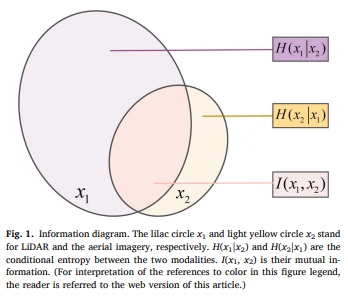

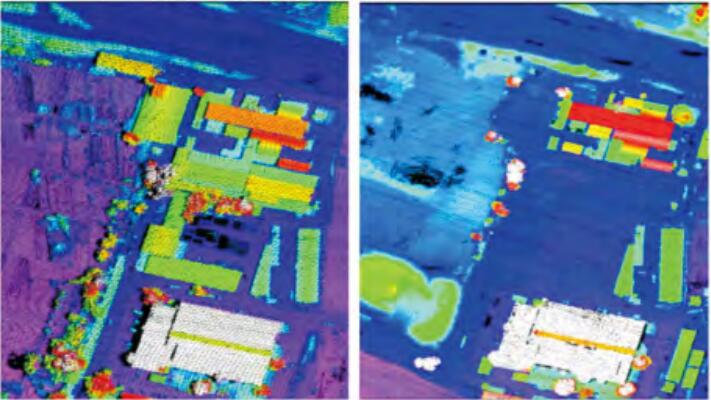

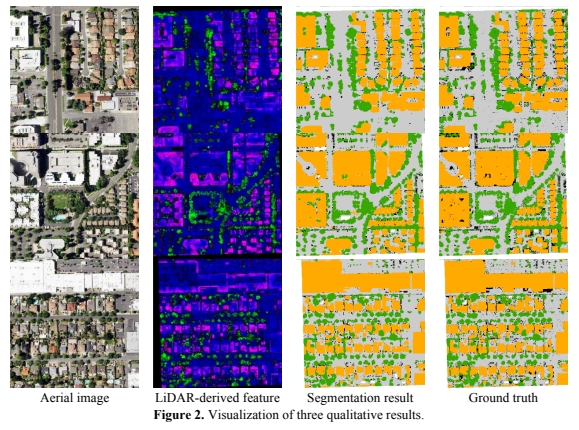

Yameng Wang, Yi Wan, , Bin Zhang, Zhi Gao. (2023) Imbalance Knowledge-driven Multi-modal Network for Land-cover Semantic Segmentation using Aerial Images and LiDAR Point Clouds. In: ISPRS Journal of Photogrammetry and Remote Sensing 202: 385-404.

Abstract: Despite the good results that have been achieved in unimodal segmentation, the inherent limitations of individual data increase the difficulty of achieving breakthroughs in performance. For that reason, multi-modal learning is increasingly being explored within the field of remote sensing. The present multi-modal methods usually map high-dimensional features to low-dimensional spaces as a preprocess before feature extraction to address the nonnegligible domain gap, which inevitably leads to information loss. To address this issue, in this paper we present our novel Imbalance Knowledge-Driven Multi-modal Network (IKD-Net) to extract features from multi-modal heterogeneous data of aerial images and LiDAR directly. IKD-Net is capable of mining imbalance information across modalities while utilizing a strong modal to drive the feature map refinement of the weaker ones in the global and categorical perspectives by way of two sophisticated plug-and-play modules: the Global Knowledge-Guided (GKG) and Class Knowledge-Guided (CKG) gated modules. The whole network then is optimized using a joint loss function. While we were developing IKD-Net, we also established a new dataset called the National Agriculture Imagery Program and 3D Elevation Program Combined dataset in California (N3C-California), which provides a particular benchmark for multi-modal joint segmentation tasks. In our experiments, IKD-Net outperformed the benchmarks and state-of-the-art methods both in the N3C-California and the small-scale ISPRS Vaihingen dataset. IKD-Net has been ranked first on the real-time leaderboard for the GRSS DFC 2018 challenge evaluation until this paper's submission. Our code and N3C-California dataset are available at https://github.com/wymqqq/IKDNet-pytorch. [full text] [link]

-

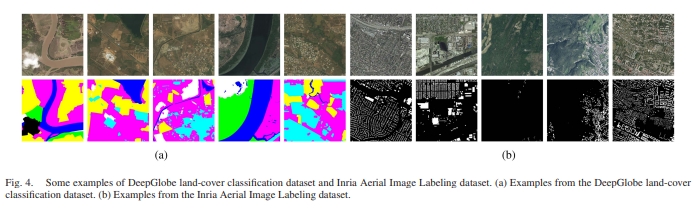

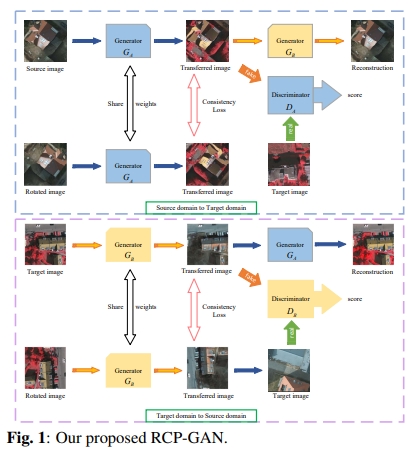

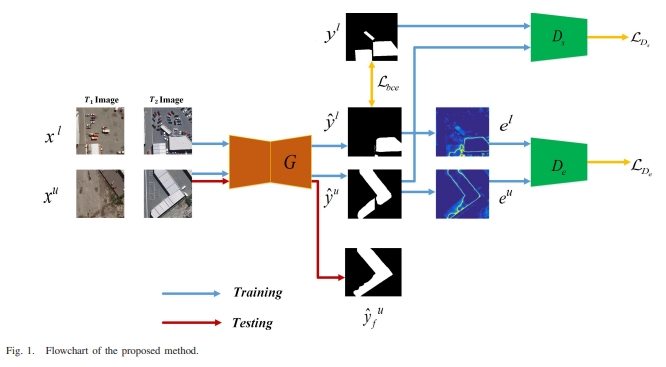

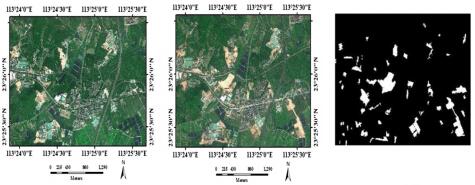

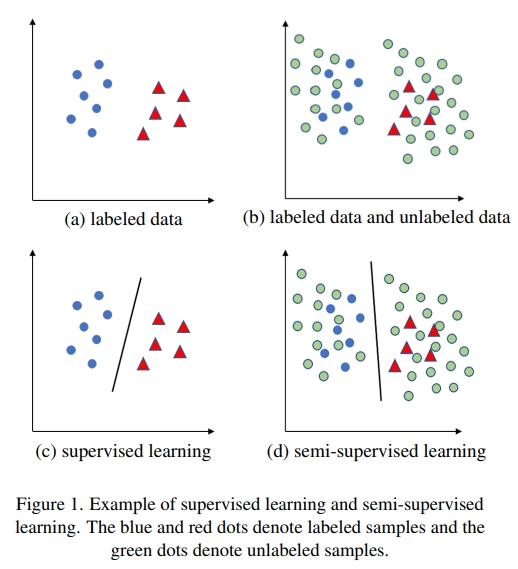

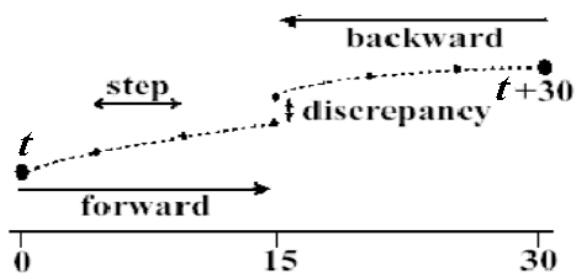

Bin Zhang, , Yansheng Li, Yi Wan, Haoyu Guo, Zhi Zheng, Kun Yang. (2023) Semi-Supervised Deep Learning via Transformation Consistency Regularization for Remote Sensing Image Semantic Segmentation. In: IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 16: 5782-5796.

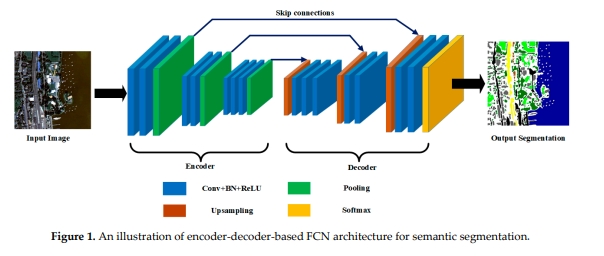

Abstract: Deep convolutional neural networks (CNNs) have gotten a lot of press in the last several years, especially in domains like computer vision (CV) and remote sensing (RS). However, achieving superior performance with deep networks highly depends on a massive amount of accurately labeled training samples. In real-world applications, gathering a large number of labeled samples is time-consuming and labor-intensive, especially for pixel-level data annotation. This dearth of labels in land-cover classification is especially pressing in the RS domain because high-precision, high-quality labeled samples are extremely difficult to acquire, but unlabeled data is readily available. In this study, we offer a new semi-supervised deep semantic labeling framework for semantic segmentation of high-resolution RS images to take advantage of the limited amount of labeled examples and numerous unlabeled samples. Our model uses transformation consistency regularization (TCR) to encourage consistent network predictions under different random transformations or perturbations. We try three different transforms to compute the consistency loss and analyze their performance. Then, we present a deep semi-supervised semantic labeling technique by using a hybrid transformation consistency regularization (HTCR). A weighted sum of losses, which contains a supervised term computed on labeled samples and an unsupervised regularization term computed on unlabeled data, may be used to update the network parameters in our technique. Our comprehensive experiments on two RS datasets confirmed that the suggested approach utilized latent information from unlabeled samples to obtain more precise predictions and outperformed existing semi-supervised algorithms in terms of performance. 
Our experiments further demonstrated that our semi-supervised semantic labeling strategy has the potential to partially tackle the problem of limited labeled samples for high-resolution RS image land-cover segmentation. [full text] [link]
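Transformation consistency regularization asks that predicting on a transformed image give the same result as transforming the prediction, i.e. f(T(x)) ≈ T(f(x)). A minimal sketch, assuming a horizontal flip as the transform T (the abstract does not name the three transforms tried), squared error as the consistency measure, binary per-pixel labels as a simplification of multi-class segmentation, and a hypothetical weight `lam`, combines a supervised term on labeled samples with the unsupervised term on unlabeled ones:

```python
import math
from typing import Callable, List, Tuple

Grid = List[List[float]]
Model = Callable[[Grid], Grid]  # hypothetical: image grid -> per-pixel probability grid


def hflip(g: Grid) -> Grid:
    """Horizontal flip, used here as the (assumed) random transformation T."""
    return [row[::-1] for row in g]


def consistency_loss(model: Model, image: Grid) -> float:
    """Mean squared difference between f(T(x)) and T(f(x))."""
    a = model(hflip(image))
    b = hflip(model(image))
    diffs = [(p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def bce(pred: Grid, label: Grid) -> float:
    """Mean per-pixel binary cross-entropy (binary labels are a simplification)."""
    eps = 1e-7
    terms = [-(t * math.log(max(p, eps)) + (1 - t) * math.log(max(1 - p, eps)))
             for rp, rt in zip(pred, label) for p, t in zip(rp, rt)]
    return sum(terms) / len(terms)


def total_loss(model: Model, labeled: List[Tuple[Grid, Grid]],
               unlabeled: List[Grid], lam: float = 1.0) -> float:
    """Supervised term on labeled data plus lam times the consistency term on unlabeled data."""
    sup = sum(bce(model(x), y) for x, y in labeled) / len(labeled)
    unsup = sum(consistency_loss(model, x) for x in unlabeled) / len(unlabeled)
    return sup + lam * unsup
```

The unlabeled images never need labels: they only contribute through the consistency term, which penalizes a network whose output changes in ways the transform does not explain.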

-

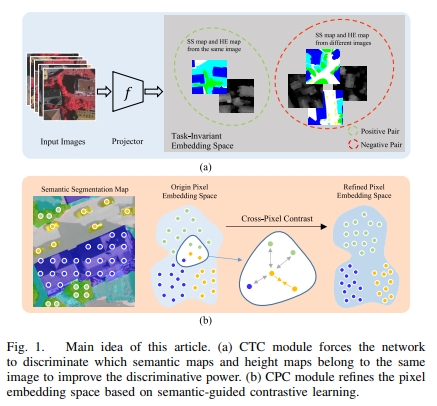

Zhi Gao, Wenbo Sun, Yao Lu, Yichen Zhang, Weiwei Song, , Ruifang Zhai. (2023) Joint Learning of Semantic Segmentation and Height Estimation for Remote Sensing Image Leveraging Contrastive Learning. In: IEEE Transactions on Geoscience and Remote Sensing, 61: 1-15.

Abstract: Semantic segmentation (SS) and height estimation (HE) are two critical tasks in remote sensing scene understanding that are highly correlated with each other. To address both the tasks simultaneously, it is natural to consider designing a unified deep learning model that aims to improve performance by jointly learning complementary information among the associated tasks. In this article, we learn the two tasks jointly under a deep multitask learning (MTL) framework and propose two novel objective functions, called cross-task contrastive (CTC) loss and cross-pixel contrastive (CPC) loss, respectively, to enhance MTL performance through contrastive learning. Specifically, the CTC loss is designed to maximize the mutual information of different task features and enforce the model to learn the consistency between SS and height estimation. In addition, our method goes beyond previous approaches that only apply contrastive learning at the instance level. Instead, we design a pixelwise contrastive loss function that pulls together pixel embeddings belonging to the same semantic class, while pushing apart pixel embeddings from different semantic classes. Furthermore, we find that this semantic-guided contrastive loss simultaneously improves the performance of the HE task. Our proposed approach is simple and effective and does not introduce any additional overhead to the model during the testing phase. We extensively evaluate our method on the Vaihingen and Potsdam datasets, and the experimental results demonstrate that our approach significantly outperforms the state-of-the-art methods in both HE and SS. [full text] [link]
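The cross-pixel idea, pulling same-class pixel embeddings together and pushing different-class ones apart, is commonly written as a supervised contrastive (InfoNCE-style) loss. The sketch below uses that standard form as an assumption; the paper's exact CPC formulation, pixel sampling and temperature are not given in the abstract, so `tau = 0.1` and the function names are illustrative:

```python
import math
from typing import List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def pixel_contrastive_loss(emb: List[Sequence[float]], labels: List[int],
                           tau: float = 0.1) -> float:
    """Supervised contrastive loss over a set of sampled pixel embeddings."""
    z = [normalize(e) for e in emb]
    n = len(z)
    # Cosine similarity scaled by the temperature
    sim = [[sum(a * b for a, b in zip(z[i], z[j])) / tau for j in range(n)] for i in range(n)]
    losses = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        positives = [j for j in others if labels[j] == labels[i]]
        if not positives:
            continue  # an anchor with no same-class partner contributes nothing
        # Log of the softmax denominator over every other pixel
        log_denom = math.log(sum(math.exp(sim[i][j]) for j in others))
        losses.append(-sum(sim[i][p] - log_denom for p in positives) / len(positives))
    return sum(losses) / len(losses) if losses else 0.0
```

The loss is small when each pixel's most similar neighbours share its class and large when different-class pixels sit closer, which matches the pull/push behaviour the abstract describes.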

-

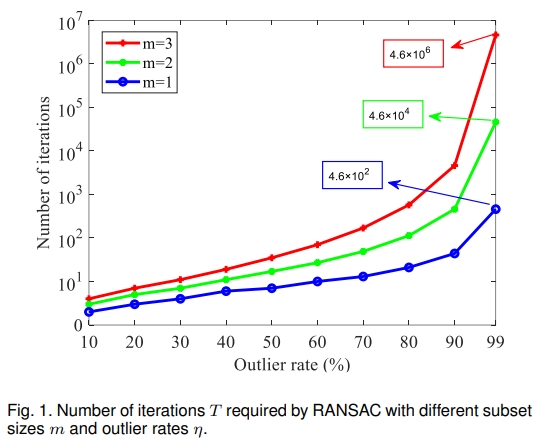

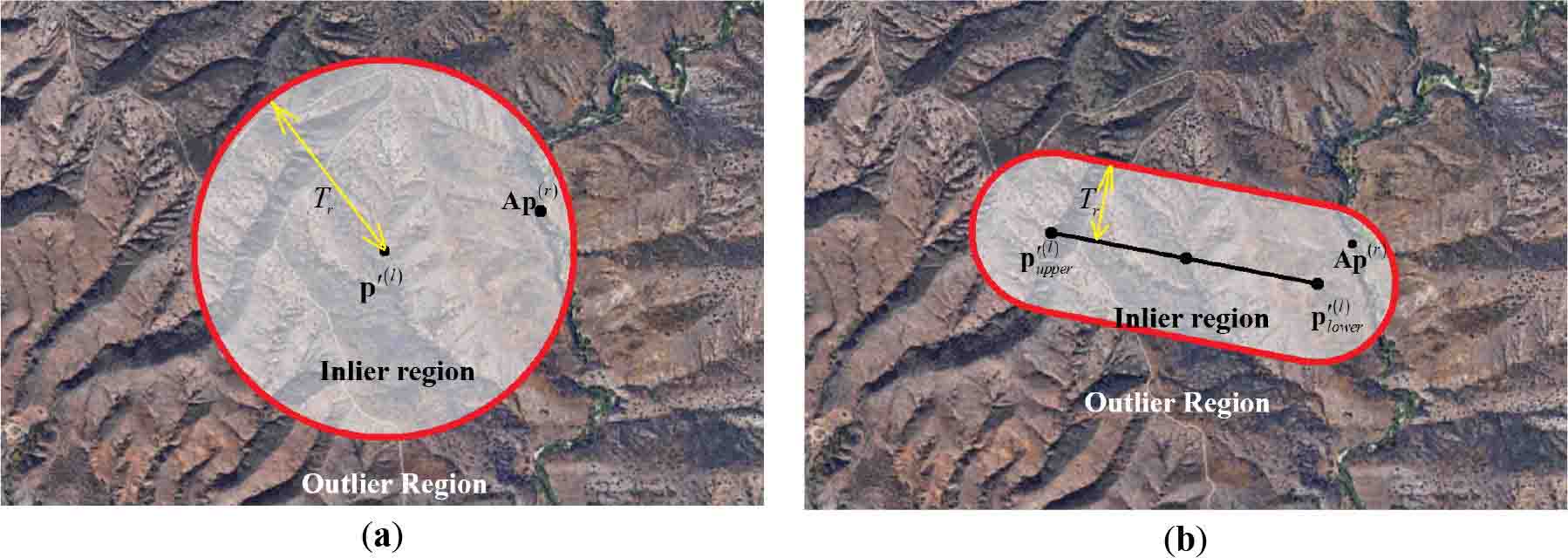

Jiayuan Li, Pengcheng Shi, Qingwu Hu, . (2023) QGORE: Quadratic-Time Guaranteed Outlier Removal for Point Cloud Registration. In: IEEE Transactions on Pattern Analysis and Machine Intelligence.

Abstract: With the development of 3D matching technology, correspondence-based point cloud registration has gained more attention. Unfortunately, 3D keypoint techniques inevitably produce a large number of outliers, i.e., the outlier rate is often larger than 95%. Guaranteed outlier removal (GORE) by Bustos and Chin has shown very good robustness to extreme outliers. However, its high computational cost (exponential in the worst case) largely limits its use in practice. In this paper, we propose the first O(N²)-time GORE method, called quadratic-time GORE (QGORE), which preserves the globally optimal solution while greatly increasing efficiency. QGORE leverages a simple but effective voting idea via geometric consistency for upper bound estimation, which achieves almost the same tightness as the one in GORE. We also present a one-point RANSAC by exploring “rotation correspondence” for lower bound estimation, which largely reduces the number of iterations of traditional 3-point RANSAC. Further, we propose an ℓp-like adaptive estimator for optimization. Extensive experiments show that QGORE achieves the same robustness and optimality as GORE while being one to two orders of magnitude faster. The source code will be made publicly available. [full text] [link]
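The geometric-consistency voting rests on a simple fact: a rigid transform preserves distances, so if every target point is corrupted by noise of norm at most ε, two inlier correspondences (pᵢ, qᵢ) and (pⱼ, qⱼ) satisfy |‖pᵢ − pⱼ‖ − ‖qᵢ − qⱼ‖| ≤ 2ε. Counting, for each correspondence, how many partners pass this check takes N(N − 1)/2 pair tests, i.e. quadratic time. The sketch below (hypothetical `consistency_votes`) shows only this generic counting step; QGORE's actual upper-bound computation, one-point RANSAC and ℓp-like estimator are not reproduced:

```python
import math
from typing import List, Sequence, Tuple

Corr = Tuple[Sequence[float], Sequence[float]]  # (source point, target point)


def consistency_votes(corrs: List[Corr], eps: float) -> List[int]:
    """For each correspondence, count partners whose pairwise distance is preserved."""
    n = len(corrs)
    votes = [0] * n
    for i in range(n):
        for j in range(i + 1, n):  # N(N-1)/2 pair checks -> quadratic time
            dp = math.dist(corrs[i][0], corrs[j][0])
            dq = math.dist(corrs[i][1], corrs[j][1])
            # Rigid motion preserves length, up to 2*eps of bounded noise
            if abs(dp - dq) <= 2 * eps:
                votes[i] += 1
                votes[j] += 1
    return votes
```

Inliers vote for one another while outliers collect few votes, so the vote count gives a cheap bound on how many inliers a correspondence can belong with.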

-

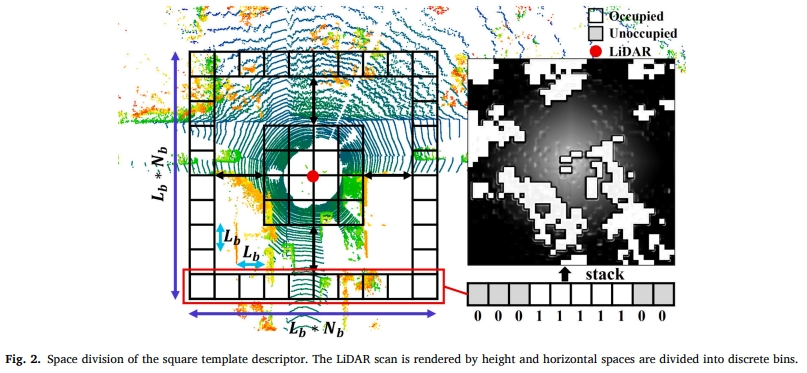

Pengcheng Shi, Jiayuan Li, . (2023) LiDAR Localization at 100 FPS: A Map-aided and Template Descriptor-based Global Method. In: International Journal of Applied Earth Observation and Geoinformation, 120: 103336.

Abstract: With the development of multi-beam Light Detection and Ranging (LiDAR) sensors, fast and accurate LiDAR-based localization has become a crucial issue in robotics and autonomous driving. However, balancing accuracy and efficiency remains challenging in existing methods. In this paper, we propose a super-fast LiDAR global localization approach that can achieve state-of-the-art (SOTA) accuracy with superior efficiency. Our method leverages template descriptors to capture structural environments and approximates the vehicle’s position via map candidate points. Additionally, we create an offline map database to evenly simulate vehicle orientations. We design a loss function to improve localization accuracy. We extensively evaluated the proposed method on public KITTI outdoor sequences and self-collected indoor datasets. The experimental results show that our approach can run at close to 100 frames per second (FPS) on a single-thread CPU, which is much faster than current SOTA methods. Our average absolute translation errors (ATEs) are 0.20 m (indoor) and 0.44 m (outdoor), and the average localization success rates are 93% (indoor) and 90% (outdoor). The average localization success rates can exceed 97% in large outdoor scenarios with fine-tuned parameters. The source code will be available at https://github.com/ShiPC-AI. [full text] [link]

-

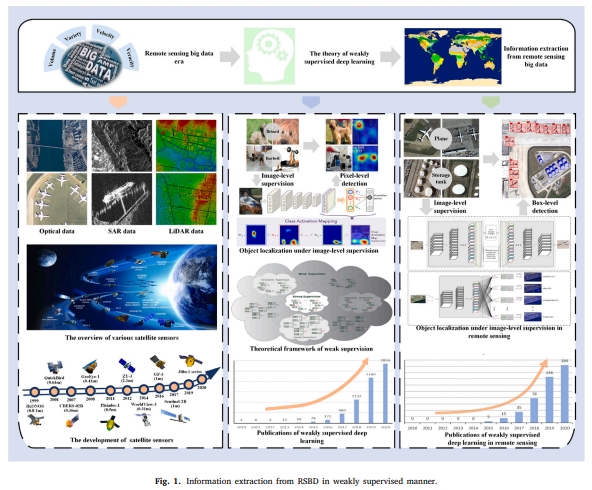

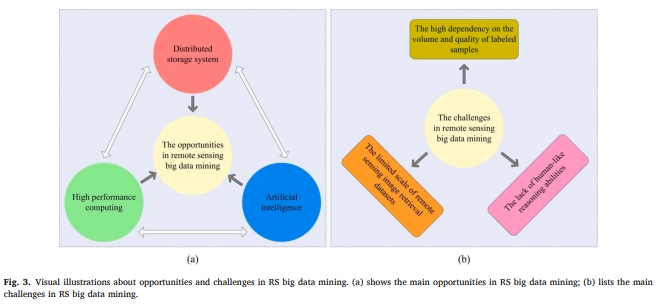

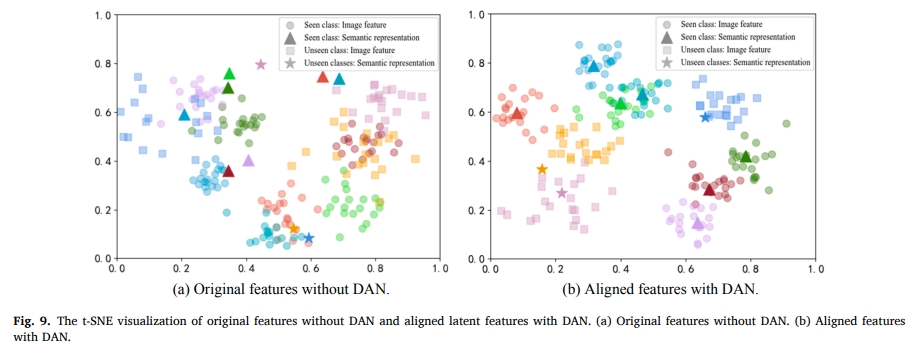

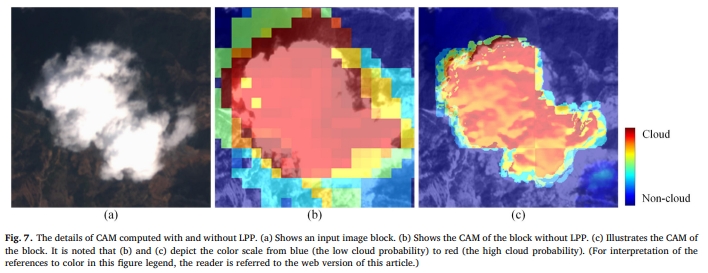

Yansheng Li, Xinwei Li, , Daifeng Peng, Lorenzo Bruzzone. (2023) Cost-Efficient Information Extraction From Massive Remote Sensing Data: When Weakly Supervised Deep Learning Meets Remote Sensing Big Data. In: International Journal of Applied Earth Observation and Geoinformation, 120: 103345.

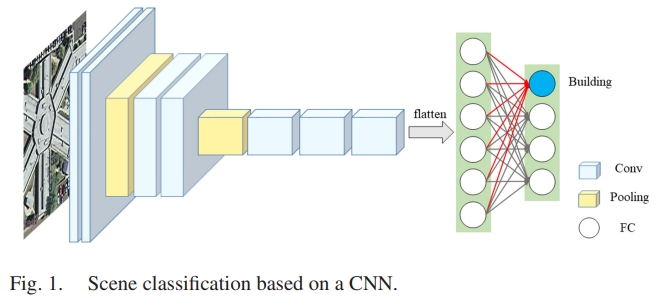

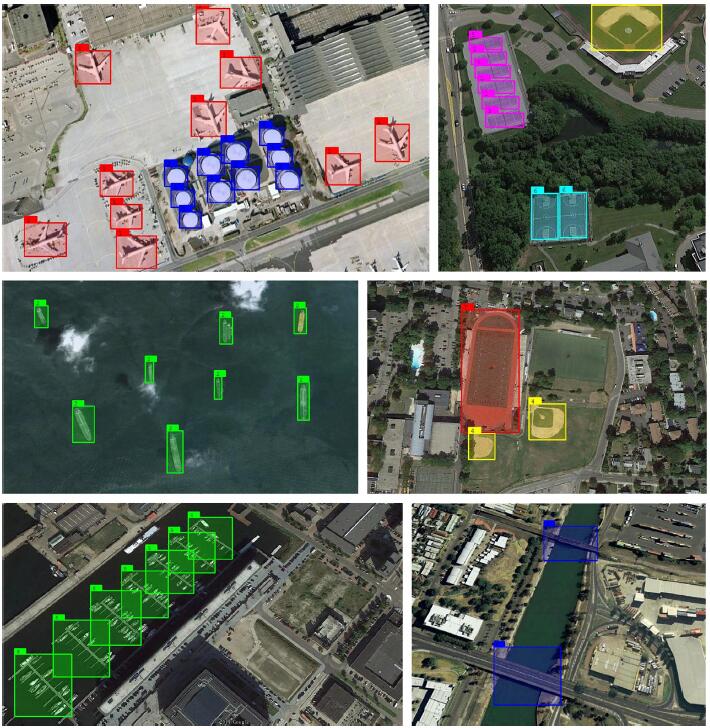

Abstract: With many platforms and sensors continuously observing the earth surface, the large amount of remote sensing data presents a big data challenge. While remote sensing data acquisition capability can fully meet the requirements of many application domains, there is still a need to further explore how to efficiently mine the useful information from remote sensing big data (RSBD). Many researchers in the remote sensing community have introduced deep learning in the process of RSBD, and deep learning-based methods have achieved better performance compared with traditional methods. However, there are still substantial obstacles to the application of deep learning in remote sensing. One of the major challenges is the generation of pixel-level labels with high quality for training samples, which is essential to deep learning models. Weakly supervised deep learning (WSDL) is a promising solution to address this problem as WSDL can utilize greedily labeled datasets that are easy to collect but not ideal to train the deep networks. In this review, we summarize the achievements of WSDL-driven cost-efficient information extraction from RSBD. We first analyze the opportunities and challenges of information extraction from RSBD. Based on the analysis of the theoretical foundations of WSDL in the computer vision (CV) domain, we conduct a survey on the WSDL-based information extraction methods under the data characteristic and task demand of RSBD in four different tasks: (i) scene classification, (ii) object detection, (iii) semantic segmentation and (iv) change detection. Finally, potential research directions are outlined to guide researchers to further exploit WSDL-based information extraction from RSBD. [full text] [link]

-

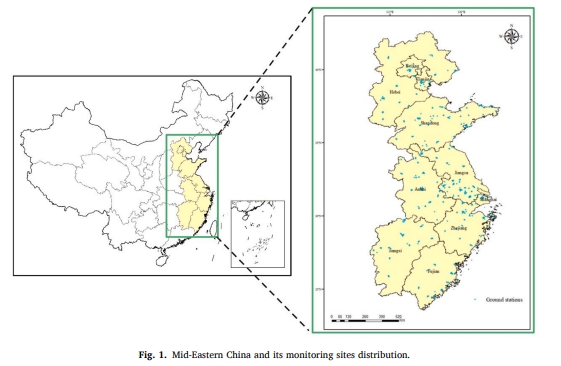

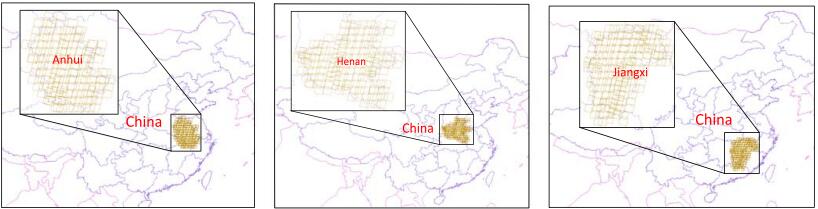

, Wenpin Wu, Yiliang Li, Yansheng Li. (2023) An Investigation of PM2.5 Concentration Changes in Mid-Eastern China Before and After COVID-19 Outbreak. In: Environment International, 175, 107941.

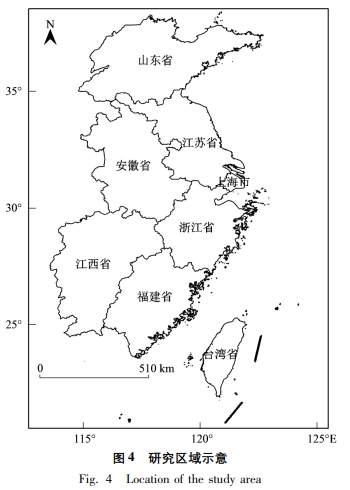

Abstract: With the Chinese government revising ambient air quality standards and strengthening the monitoring and management of pollutants such as PM2.5, the concentrations of air pollutants in China have gradually decreased in recent years. Meanwhile, the strong control measures taken by the Chinese government in the face of COVID-19 in 2020 had an extremely profound impact on the reduction of pollutants in China. Investigating pollutant concentration changes in China before and after the COVID-19 outbreak is therefore necessary and of broad concern, but the number of monitoring stations is very limited, making it difficult to conduct a high spatial density investigation. In this study, we construct a modern deep learning model based on multi-source data, which includes remotely sensed AOD data products, other reanalysis element data, and ground monitoring station data. Combining satellite remote sensing techniques, we realize a high spatial density method for investigating PM2.5 concentration changes, and analyze the seasonal, annual, spatial and temporal characteristics of PM2.5 concentrations in Mid-Eastern China from 2016 to 2021, as well as the impact of epidemic closure and control measures on regional and provincial PM2.5 concentrations. We find that PM2.5 concentrations in Mid-Eastern China during these years are mainly characterized by “north-south superiority and central inferiority”; seasonal differences are evident, with the highest concentrations in winter, the second highest in autumn and the lowest in summer, and a gradual decrease in overall concentration during the year. According to our experimental results, the annual average PM2.5 concentration decreases by 3.07 % in 2020, and decreases by 24.53 % during the shutdown period, which is probably caused by China's epidemic control measures. At the same time, some provinces with a large share of secondary industry see PM2.5 concentrations drop by more than 30 %. 
By 2021, PM2.5 concentrations rebound slightly, rising by 10 % in most provinces. [full text] [link]

-

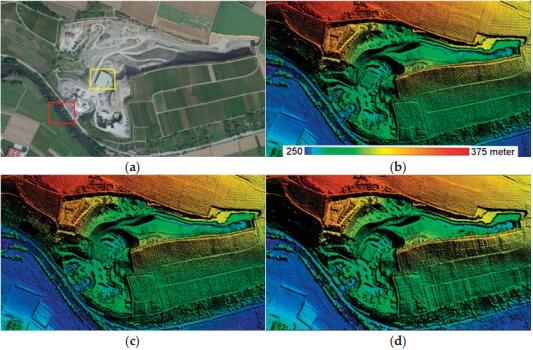

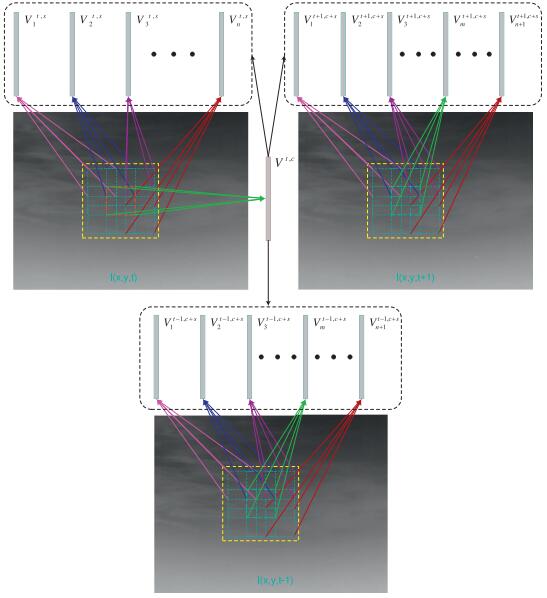

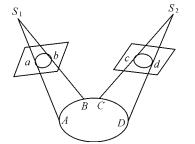

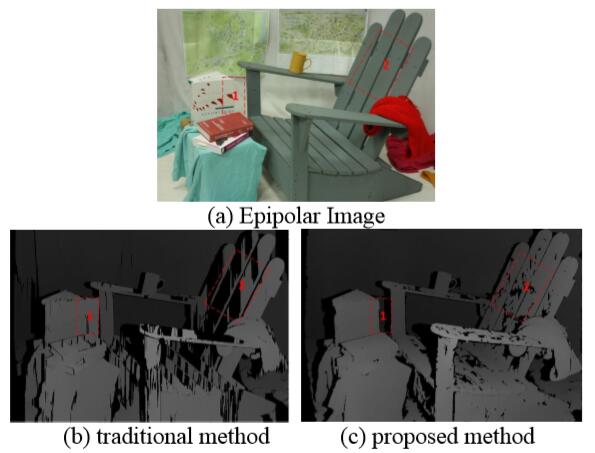

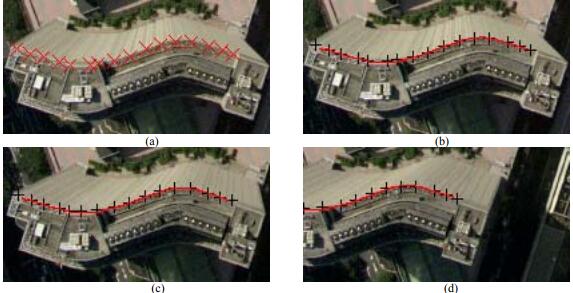

Siyuan Zou, Xinyi Liu, Xu Huang, , Senyuan Wang, Shuang Wu, Zhi Zheng, Bingxin Liu. (2023) Edge-Preserving Stereo Matching Using LiDAR Points and Image Line Features. In: IEEE Geoscience and Remote Sensing Letters, 20: 6000205.

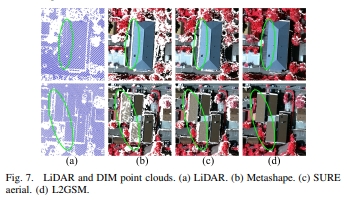

Abstract: This letter proposes a LiDAR and image line-guided stereo matching method (L2GSM), which combines sparse but high-accuracy LiDAR points and sharp object edges of images to generate accurate and fine-structure point clouds. After extracting depth discontinuity lines on the image by using LiDAR depth information, we propose a trilateral update of cost volume and depth discontinuity lines-aware semi-global matching (SGM) strategies to integrate LiDAR data and depth discontinuity lines into the dense matching algorithm. The experimental results for the indoor and aerial datasets show that our method significantly improves the results of the original SGM and outperforms two state-of-the-art LiDAR-constrained SGM methods, especially in recovering the 3-D structure of low-textured and depth discontinuity regions. In addition, the 3-D point clouds generated by our proposed method outperform the LiDAR data and the dense matching point clouds generated by Metashape and SURE Aerial in terms of completeness and edge accuracy. [full text] [link]
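For readers unfamiliar with the SGM baseline that L2GSM extends, standard semi-global matching aggregates a per-pixel matching cost C(p, d) along 1D paths with the recurrence L_r(p, d) = C(p, d) + min(L_r(p − r, d), L_r(p − r, d − 1) + P1, L_r(p − r, d + 1) + P1, min_k L_r(p − r, k) + P2) − min_k L_r(p − r, k). The sketch below aggregates a single left-to-right path on one scanline; full SGM sums several such paths, the P1/P2 values are illustrative, and L2GSM's trilateral cost update and discontinuity-line awareness are not reproduced:

```python
from typing import List


def aggregate_path(cost: List[List[float]], p1: float, p2: float) -> List[List[float]]:
    """SGM aggregation along one left-to-right path; cost[x][d] is the matching cost."""
    n_disp = len(cost[0])
    agg = [list(cost[0])]  # the first pixel on the path has no predecessor
    for x in range(1, len(cost)):
        prev = agg[-1]
        prev_min = min(prev)
        row = []
        for d in range(n_disp):
            candidates = [prev[d], prev_min + p2]  # same disparity, or a large jump
            if d > 0:
                candidates.append(prev[d - 1] + p1)  # small change of one disparity
            if d + 1 < n_disp:
                candidates.append(prev[d + 1] + p1)
            # Subtracting prev_min keeps values bounded without changing the argmin
            row.append(cost[x][d] + min(candidates) - prev_min)
        agg.append(row)
    return agg


def winner_takes_all(agg: List[List[float]]) -> List[int]:
    """Pick the lowest-cost disparity for each pixel."""
    return [min(range(len(r)), key=r.__getitem__) for r in agg]
```

With cost = [[0, 5, 5], [5, 5, 4], [0, 5, 5]], P1 = 1 and P2 = 3, raw winner-takes-all picks disparities [0, 2, 0], while the aggregated costs give [0, 0, 0]: the smoothness penalties override the noisy middle pixel. Large jumps cost P2, which is why edge-preserving variants care where depth discontinuities lie.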

-

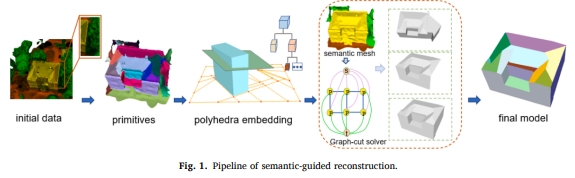

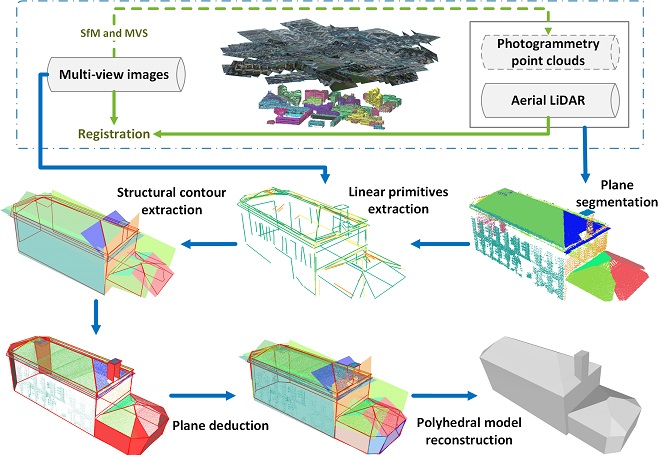

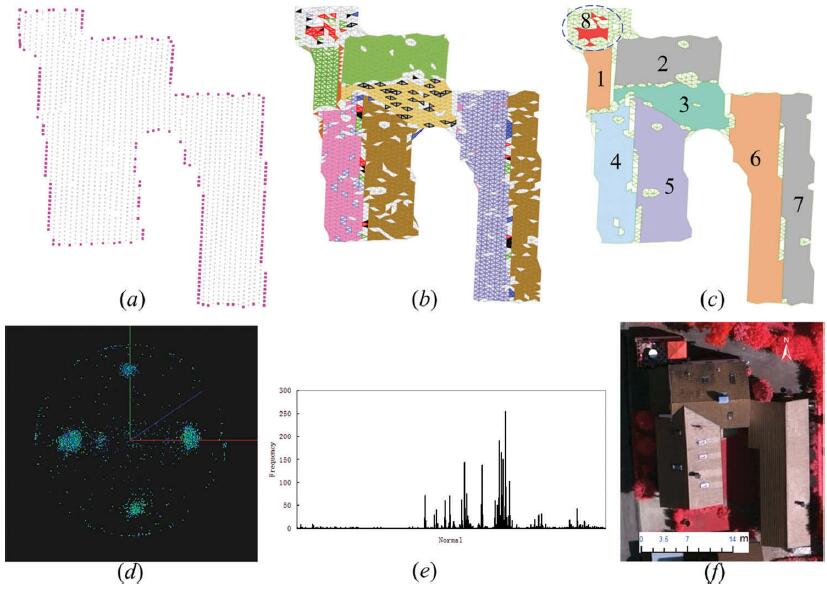

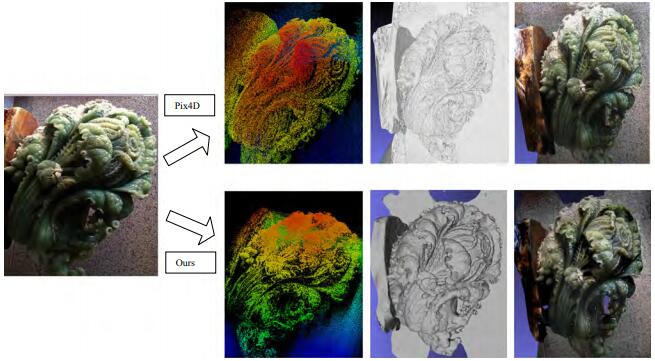

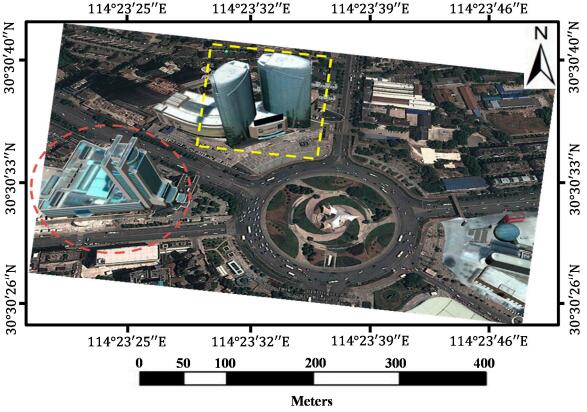

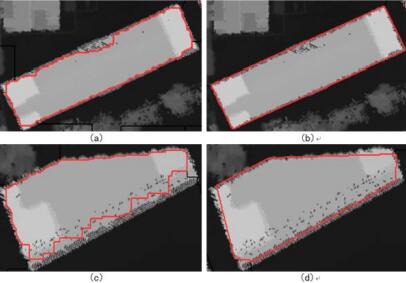

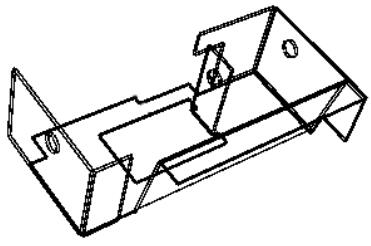

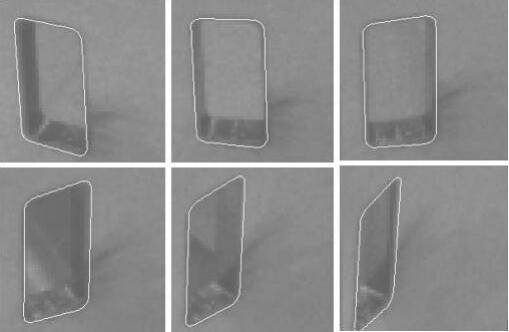

Senyuan Wang, Xinyi Liu, , Jonathan Li, Siyuan Zou, Jipeng Wu, Chuang Tao, Quan Liu, Guorong Cai. (2023) Semantic-guided 3D Building Reconstruction from Triangle Meshes. In: International Journal of Applied Earth Observation and Geoinformation, 119, 103324.

Abstract: Planar primitives tend to be incorrectly detected or incomplete in complex scenes where adhesions exist between different objects, resulting in topology errors in the reconstructed models. We propose a semantic-guided building reconstruction method known as semantic-guided reconstruction (SGR), which is capable of achieving the independence and integrity of building models in two key stages. In the first stage, the space partition is represented by a 2.5D convex cell complex and is capable of restoring planar primitives that are easily lost and can further infer the potential structural adaptivity. The second stage incorporates semantic information into a graph-cut formulation that can assist in the independent reconstruction of buildings while eliminating interference from the surrounding environment. Our experimental results confirmed that the SGR method can authentically reconstruct weakly observed surfaces. Furthermore, qualitative and quantitative evaluations show that SGR is suitable for reconstructing surfaces from insufficient data with semantic and geometric ambiguity or semantic errors and can obtain watertight models considering fidelity, integrity and time complexity. [full text] [link]

-

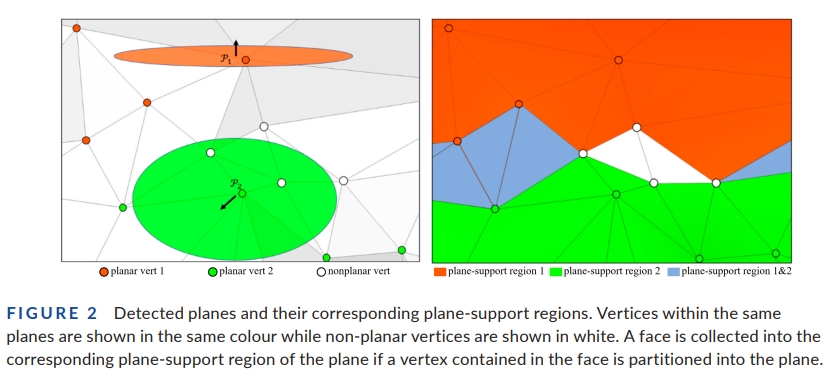

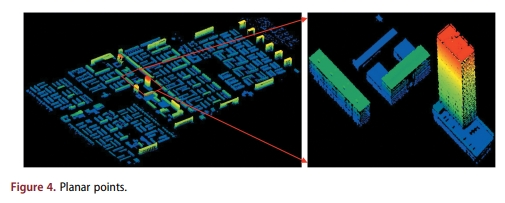

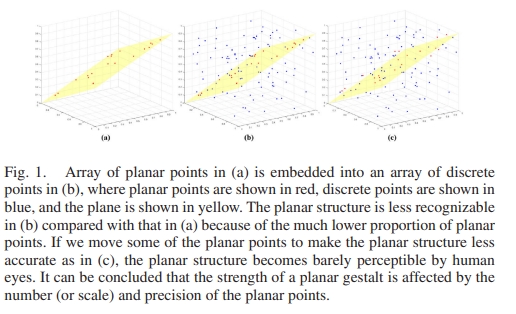

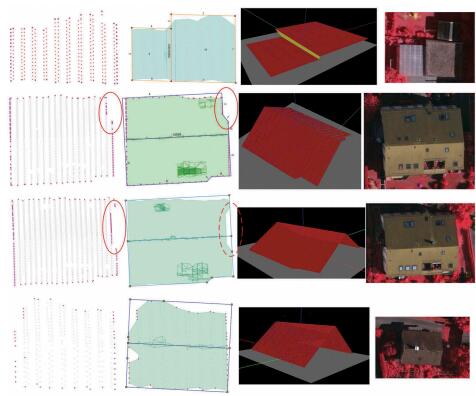

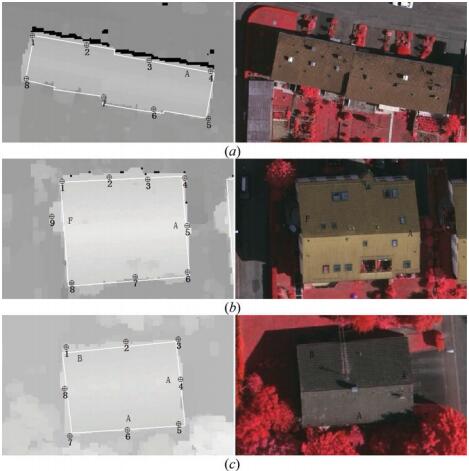

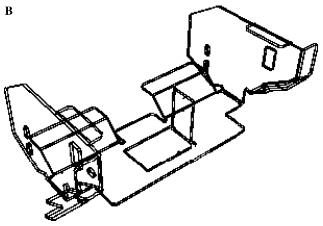

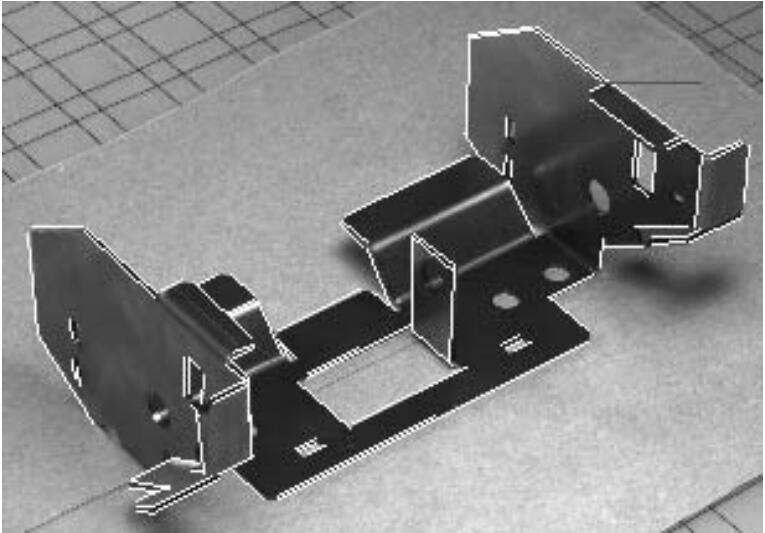

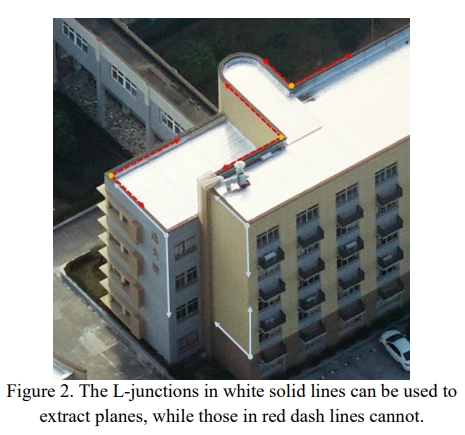

Xinyi Liu, Xianzhang Zhu, , Senyuan Wang, Chen Jia. (2023) Generation of Concise 3D Building Model from Dense Meshes by Extracting and Completing Planar Primitives. In: The Photogrammetric Record, 38(181): 22-46.

Abstract: Generating concise building models has been, and continues to be, a challenge in photogrammetry and computer graphics. Current methods typically focus on the simplicity and fidelity of the model, but they either fail to preserve structural information or suffer from low computational efficiency. In this paper, we propose a novel method to generate concise building models from dense meshes by extracting and completing the planar primitives of the building. From a probabilistic perspective, we first extract planar primitives from the input mesh and obtain the adjacency relationships between them. Since primitive loss and structural defects are inevitable in practice, we employ a novel structural completion approach to eliminate linkage errors. Finally, the concise polygonal mesh is reconstructed by connectivity-based primitive assembly. Our method is efficient and robust to a variety of challenging data. Experiments on various building models demonstrate the efficacy and applicability of our method. [full text] [link]

-

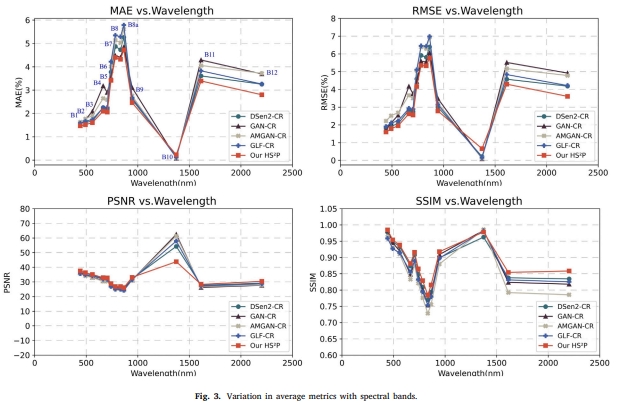

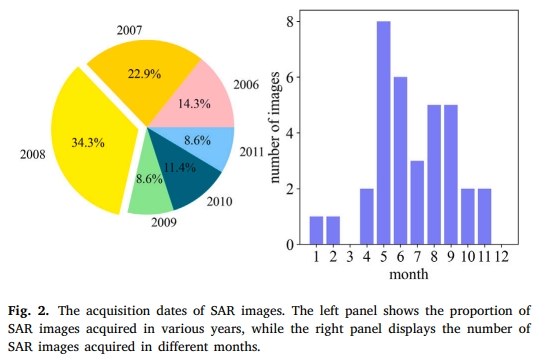

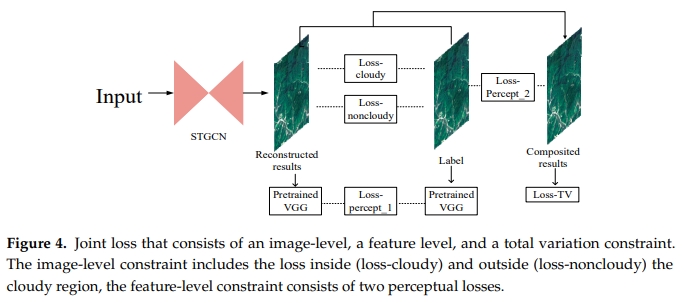

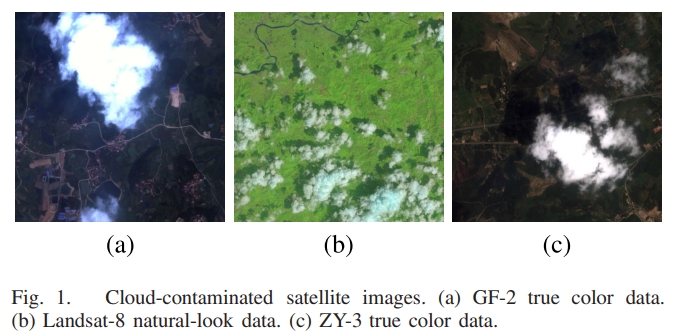

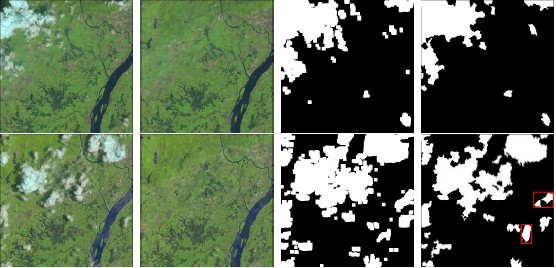

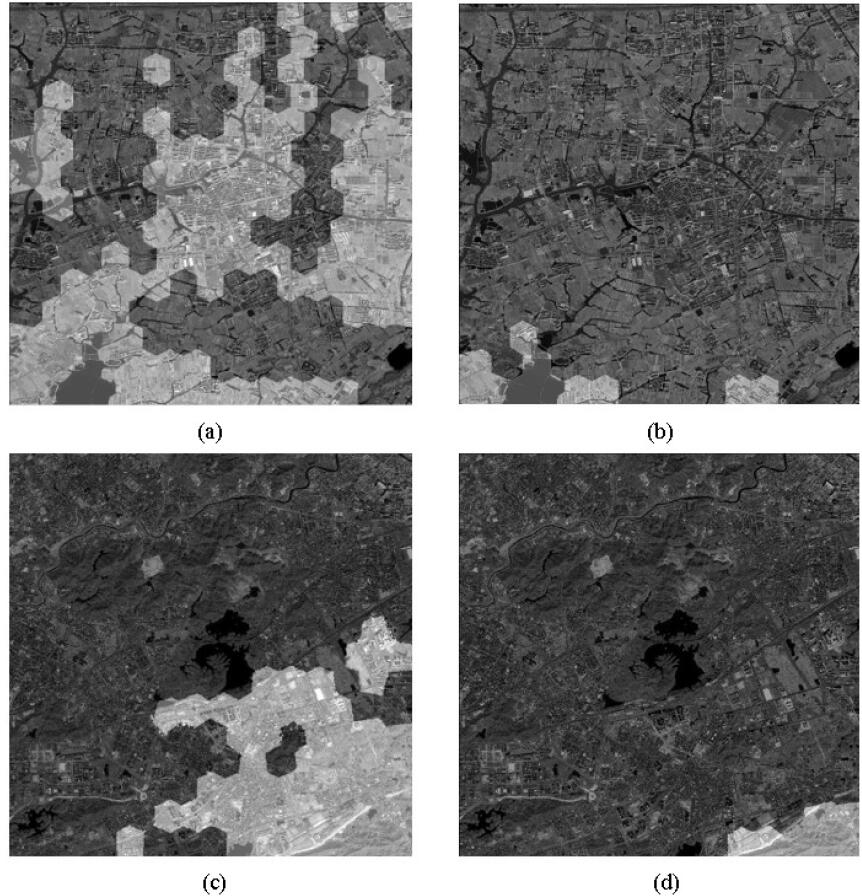

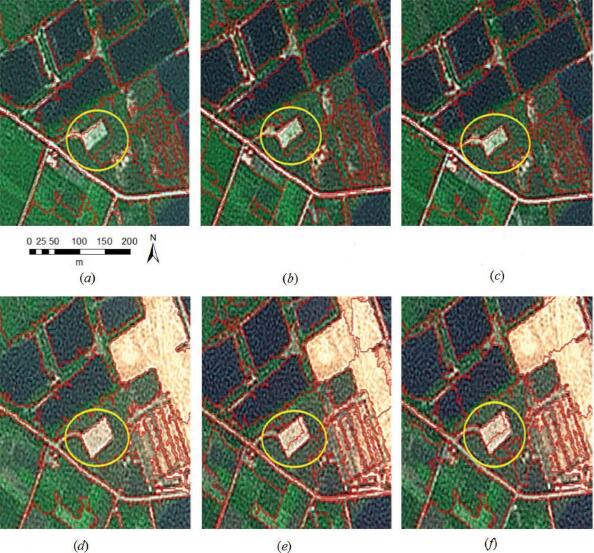

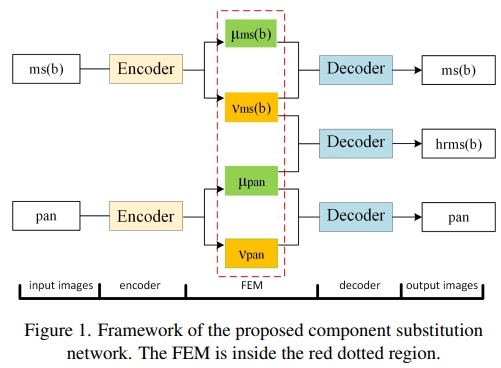

Yansheng Li, Fanyi Wei, , Wei Chen, Jiayi Ma. (2023) HS2P: Hierarchical spectral and structure-preserving fusion network for multimodal remote sensing image cloud and shadow removal. In: Information Fusion, 94: 215-228.

Abstract: Optical remote sensing images are often contaminated by clouds and shadows, resulting in missing data that greatly hinders consistent Earth observation missions. Cloud and shadow removal is therefore one of the most important tasks in optical remote sensing image processing. Because active imaging enables synthetic aperture radar (SAR) to penetrate cloud cover and operate under other adverse weather conditions, SAR data are extensively used to guide cloud and shadow removal from optical remote sensing images. Nevertheless, SAR data are heavily corrupted by speckle noise, which introduces artifacts into the spectral features extracted from optical images and makes SAR-optical fusion ill-posed when the goal is to remove clouds and shadows while retaining high spectral fidelity and reasonable spatial structures. To overcome these drawbacks, this paper presents a novel hierarchical spectral and structure-preserving fusion network (HS2P), which recovers cloud and shadow regions in optical remote sensing imagery through the hierarchical fusion of optical and SAR imagery. In HS2P, we present a deep hierarchical architecture with stacked residual groups (ResGroups), which progressively constrains the reconstruction. To adaptively select more informative features for fusion and to reduce attention to features corrupted by clouds and shadows in optical data or by speckle noise in SAR data, we introduce residual blocks with a channel attention mechanism (RBCA). Additionally, a novel collaborative optimization loss function is proposed to preserve spectral features while enhancing structural details. Extensive experiments on the public SEN12MS-CR dataset demonstrate that the proposed method can robustly recover diverse ground information in optical remote sensing imagery under various cloud types. Compared with state-of-the-art cloud and shadow removal methods, HS2P achieves significant improvements in both quantitative and qualitative results. The source code is publicly available at https://github.com/weifanyi515/HS2P. [full text] [link]

-

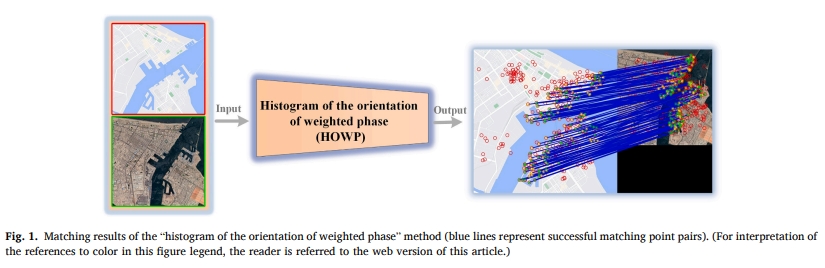

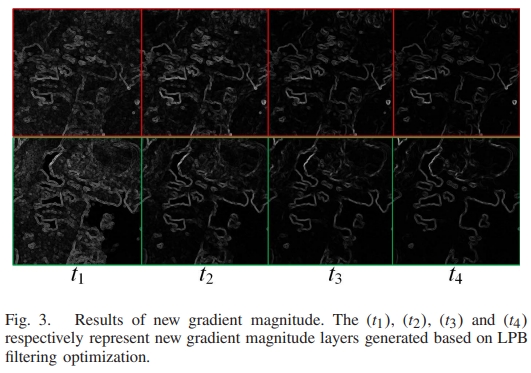

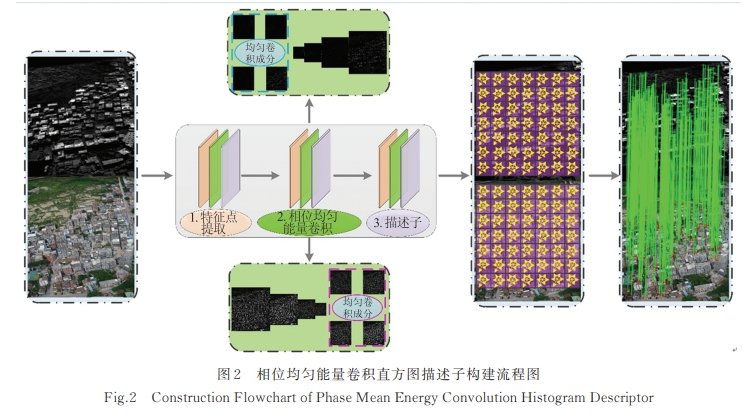

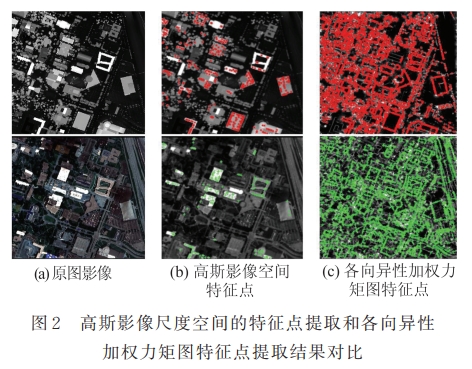

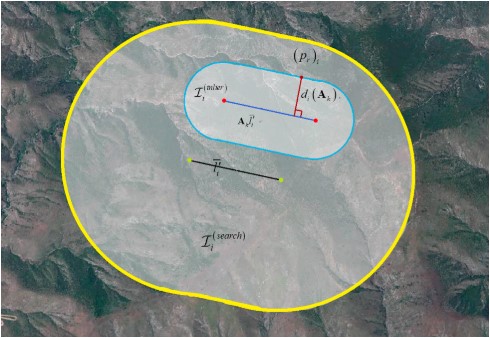

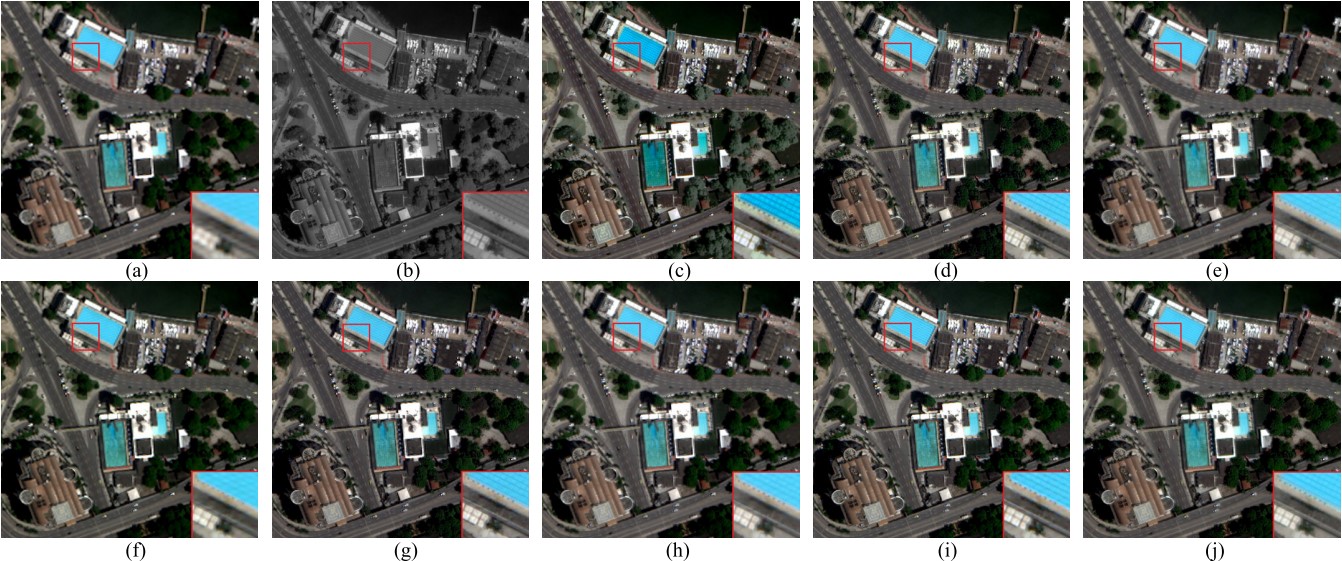

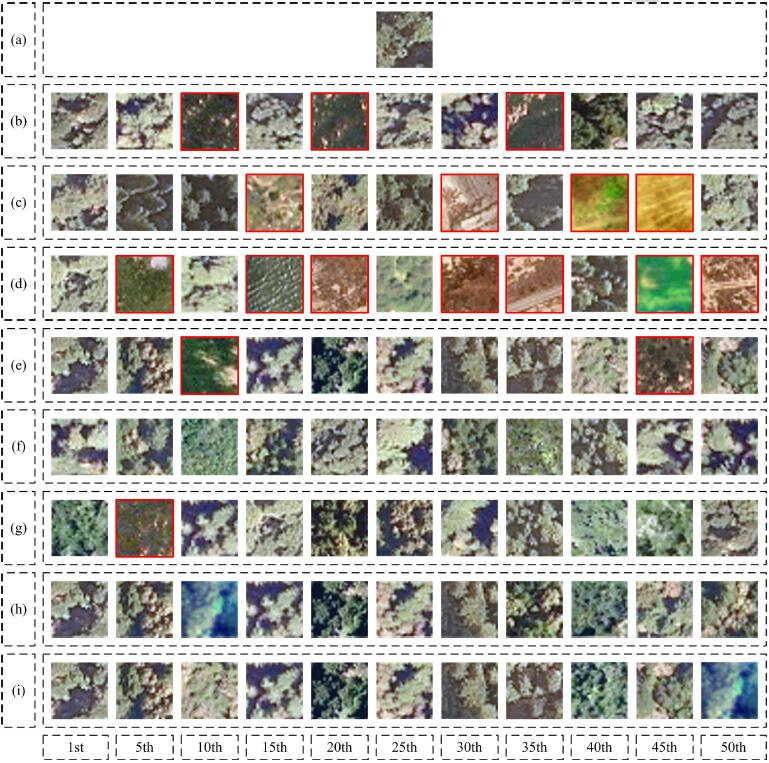

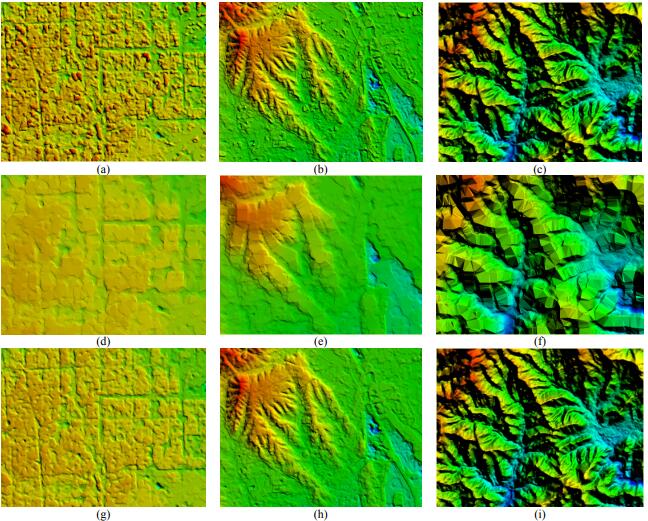

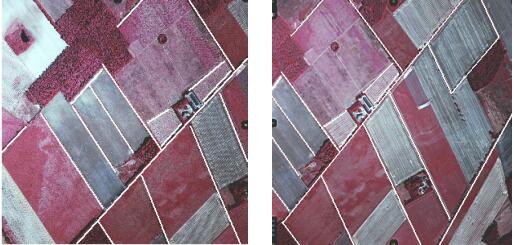

, Yongxiang Yao, Yi Wan, Weiyu Liu, Wupeng Yang, Zhi Zheng, Rang Xiao. (2023) Histogram of the Orientation of the Weighted Phase Descriptor for Multi-modal Remote Sensing Image Matching. In: ISPRS Journal of Photogrammetry and Remote Sensing 196: 1-15.

Abstract: Multi-modal remote sensing images (MRSIs) exhibit nonlinear radiation distortion (NRD) and significant contrast differences, to which image gradient features are usually sensitive. Although image phase features are more robust against NRD, they are of limited help in resolving the directional inversion and phase extreme value mutation problems that are common in phase feature calculation. To address these issues, this paper proposes a new MRSI matching method, the “histogram of the orientation of weighted phase” (HOWP). The method differs from other methods in three aspects: (1) a feature aggregation strategy optimizes feature points by extracting corner and blob features separately; (2) a novel weighted phase orientation model replaces the traditional image gradient orientation features; and (3) a regularization-based log-polar descriptor is constructed to generate robust feature description vectors. To evaluate the performance of the proposed method, we selected 50 sets of typical MRSIs with translation, scale, and rotation differences and compared HOWP with four other state-of-the-art methods. The results show that our method is more resistant to radiometric distortion and contrast differences in MRSIs. It also handles direction reversal and phase extreme value mutation better, as evidenced by a larger number of correct matches (NCM). Specifically, the method improves the average NCM by 1.6-4.5 times, the average success rate by 35.5%, and the average rate of correct matches by 11.1%, with an average root-mean-square error (RMSE) of 1.93 pixels. Moreover, we propose an extended version of HOWP for images without rotation (Simplified-HOWP), which improves the average NCM by 0.75 times over HOWP. The executable code and test data are available at https://skyearth.org/publication/project/HOWP/. [full text] [link]
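
The evaluation above reports NCM, the rate of correct matches, and RMSE. A minimal sketch of how such metrics are commonly computed from putative matches and a known ground-truth transform is shown below. The 3-pixel threshold, computing RMSE over correct matches only, and the toy translation are assumptions for illustration, not necessarily the paper's protocol.

```python
import math


def evaluate_matches(matches, transform, threshold=3.0):
    """Return (NCM, RCM, RMSE) for a list of putative matches.

    matches: list of ((x1, y1), (x2, y2)) point pairs.
    transform: ground-truth mapping from image-1 to image-2 coordinates.
    threshold: residual in pixels below which a match counts as correct (assumed).
    """
    residuals = []
    for p, q in matches:
        tx, ty = transform(p)
        residuals.append(math.hypot(tx - q[0], ty - q[1]))
    correct = [r for r in residuals if r <= threshold]
    ncm = len(correct)
    rcm = ncm / len(matches) if matches else 0.0
    rmse = math.sqrt(sum(r * r for r in correct) / ncm) if ncm else float("nan")
    return ncm, rcm, rmse


def shift(p):
    """Hypothetical ground truth: a 10-pixel shift along x."""
    return (p[0] + 10, p[1])


matches = [((0, 0), (10, 1)), ((5, 5), (15, 5)), ((1, 1), (30, 30))]
print(evaluate_matches(matches, shift))  # two correct matches out of three
```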

-

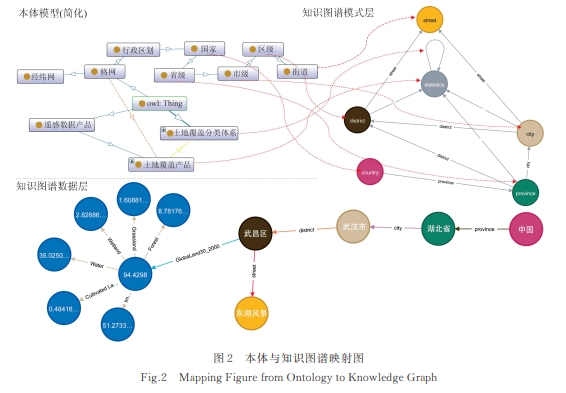

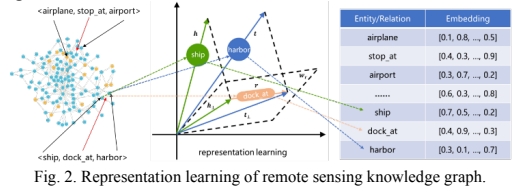

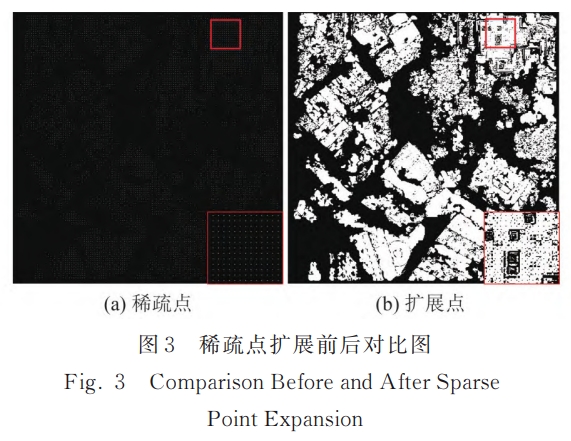

Xiaojian Liu, , Huimin Zou, Fei Wang, Xin Cheng, Wenpin Wu, Xinyi Liu, Yansheng Li. (2023) Multi-source Knowledge Graph Reasoning for Ocean Oil Spill Detection from Satellite SAR Images. In: International Journal of Applied Earth Observation and Geoinformation, 116: 103153.

Abstract: Marine oil spills can cause severe damage to the marine environment and biological resources. Satellite remote sensing is one of the best ways to monitor the sea surface in near real time and obtain oil spill information. Existing methods either apply deep convolutional neural networks to synthetic aperture radar (SAR) images to identify oil spills directly, or use traditional methods based on handcrafted features to distinguish oil spills from the sea surface step by step. However, both approaches use only image information and ignore valuable auxiliary information, such as marine weather conditions and the distances from oil spill candidates to oil spill sources. In this study, we propose a novel method that helps detect marine oil spills by constructing a multi-source knowledge graph, the first one specifically designed for oil spill detection in the remote sensing field. Our method rationally organizes and utilizes various oil spill-related information obtained from multiple data sources, such as remote sensing images, vectors, texts, and atmosphere-ocean model data, which can be stored in a graph database for user-friendly query and management. To identify oil spills more effectively, we also propose 13 new dark spot features and then use a feature selection technique to create a feature subset favorable to oil spill detection. Furthermore, we propose a knowledge graph-based oil spill reasoning method that combines rule inference and graph neural network technology: statistical rules first infer and eliminate most non-oil spills, alleviating the imbalance between data categories (oil slick and non-oil slick), and a graph neural network then performs entity recognition on the remaining oil spill candidates. To verify the effectiveness of our knowledge graph approach, we collected 35 large SAR images to construct a new dataset: the training set contains 110 oil slicks and 66264 non-oil slicks from 18 SAR images, the validation set contains 35 oil slicks and 69005 non-oil slicks from 10 SAR images, and the test set contains 36 oil slicks and 36281 non-oil slicks from the remaining 7 SAR images. The results show that some traditional oil spill detection methods and deep learning models fail when the dataset is severely imbalanced, while our method identifies oil spills with a sensitivity of 0.8428, a specificity of 0.9985, and a precision of 0.2781 under the same conditions. The proposed multi-source knowledge graph method can not only help solve the problem of information islands in oil spill detection, but also serve as a guide for constructing remote sensing knowledge graphs in many other applications. The dataset has been made freely available online (https://pan.baidu.com/s/1DDaqIljhjSMEUHyaATDIYA?pwd=qmt6). [full text] [link]
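
Sensitivity, specificity, and precision follow directly from confusion-matrix counts. The sketch below uses hypothetical toy counts (not the paper's results) to show why, under heavy class imbalance, a specificity close to 1 can still coexist with a low precision: even a small false-positive rate over many negatives produces more false alarms than true detections.

```python
def detection_metrics(tp, fp, tn, fn):
    """Return (sensitivity, specificity, precision) from confusion counts."""
    sensitivity = tp / (tp + fn)  # share of real oil slicks that are detected
    specificity = tn / (tn + fp)  # share of look-alikes correctly rejected
    precision = tp / (tp + fp)    # share of detections that are real oil slicks
    return sensitivity, specificity, precision


# Hypothetical counts: 10 positives versus 10,000 negatives.
print(detection_metrics(tp=8, fp=20, tn=9980, fn=2))
# sensitivity 0.8, specificity 0.998, precision about 0.286
```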

-

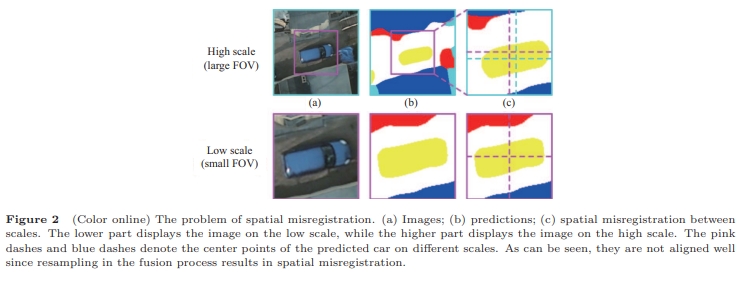

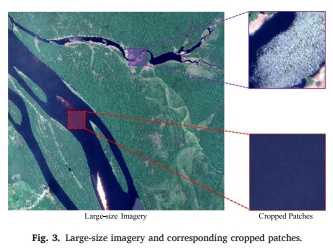

Zhiyong Peng, Jun Wu, , Xianhua Lin. (2023) MFVNet: A Deep Adaptive Fusion Network with Multiple Field-of-Views for Remote Sensing Image Semantic Segmentation. In: Science China Information Sciences, 2023, 66: 140305.

Abstract: In recent years, remote sensing image (RSI) semantic segmentation has attracted increasing research interest owing to its wide range of applications. Because of their large fields of view (FOVs), RSIs are difficult to process holistically on current GPU cards, and the prevailing workarounds, such as downsampling and cropping, inevitably degrade the quality of semantic segmentation. To address this conflict, this paper proposes a new deep adaptive fusion network with multiple FOVs (MFVNet), which is specially designed for RSI semantic segmentation. Unlike existing methods, MFVNet takes into account the differences among multiple FOVs. By pyramid sampling of the RSI, we first obtain images on different scales with multiple FOVs. Images on the high scale with a large FOV capture larger spatial contexts and complete object contours, while images on the low scale with a small FOV retain higher spatial resolution and more detailed information. Scale-specific models are then chosen to make the best predictions for each scale. Next, the output feature maps and score maps are aligned by the scale alignment module to overcome spatial misregistration among scales. Finally, the aligned score maps are fused using adaptive weight maps generated by the adaptive fusion module, producing the fused prediction. MFVNet surpasses previous state-of-the-art semantic segmentation models on three typical RSI datasets, demonstrating its effectiveness. [full text] [link]

-

Shunping Ji, Chang Zeng, , Yulin Duan. (2023) An evaluation of conventional and deep learning-based image-matching methods on diverse datasets. In: The Photogrammetric Record, 38(182): 137-159.

Abstract: Image matching plays an important role in photogrammetry, computer vision and remote sensing. Modern deep learning-based methods have been proposed for image matching; however, it remains unclear whether they will surpass and replace conventional handcrafted methods in the remote sensing field, and a comprehensive evaluation on stereo remote sensing images is lacking. This paper comprehensively evaluates the performance of conventional and deep learning-based image-matching methods by dividing the matching process into feature point extraction, description and similarity measure, on various datasets including images captured in close-range indoor and outdoor scenarios and from unmanned aerial vehicle (UAV) and satellite platforms. Different combinations of the three steps are evaluated. The experimental results reveal the following. First, the performance of the different combinations varies between individual datasets, and it is difficult to determine the best combination. Second, using more comprehensive indicators over all the datasets, namely the average rank and absolute rank, the combination of scale-invariant feature transform (SIFT), ContextDesc and the nearest neighbour distance ratio (NNDR), as well as the original SIFT, achieve the best results and are recommended for use in remote sensing. Third, the deep learning-based Sub-SuperPoint extractor performs well, second only to SIFT; the learning-based ContextDesc descriptor is as effective as the SIFT descriptor; and the learning-based SuperGlue matcher is not as stable as NNDR but leads to a few top-performing combinations. Finally, the handcrafted methods are generally faster than the deep learning-based methods, although the efficiency of the latter is acceptable. We conclude that although a fully deep learning-based method or combination has not yet beaten the conventional methods, there is still much room for improvement, because large-scale aerial and satellite training datasets remain to be constructed and methods specific to remote sensing images remain to be developed. [full text] [link]
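
The NNDR matcher recommended above is the nearest-neighbour distance ratio test: a match is kept only if the closest descriptor is clearly closer than the second closest. The brute-force sketch below illustrates the idea. The 0.8 ratio is a commonly used value assumed here, not a setting taken from the paper, and the 2-D "descriptors" are toy data.

```python
import math


def nndr_match(query, train, ratio=0.8):
    """Return (query_index, train_index) pairs that pass the ratio test.

    Requires at least two train descriptors so that a second neighbour exists.
    """
    matches = []
    for qi, q in enumerate(query):
        dists = sorted((math.dist(q, t), ti) for ti, t in enumerate(train))
        (d1, best), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:  # unambiguous nearest neighbour
            matches.append((qi, best))
    return matches


query = [[0, 0], [5, 5]]
train = [[0, 0.1], [10, 10], [5, 5.2], [5, 4.79]]
print(nndr_match(query, train))  # [(0, 0)]: the second query is ambiguous
```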

-

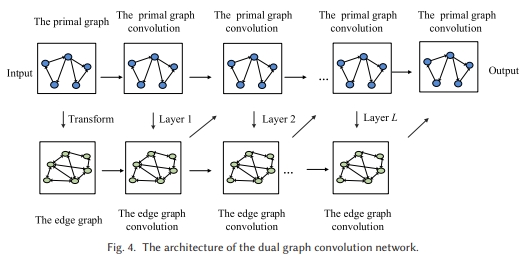

Ling Chen, Xing Tang, Weiqi Chen, Yuntao Qian, Yansheng Li, . (2022) DACHA: A Dual Graph Convolution Based Temporal Knowledge Graph Representation Learning Method Using Historical Relation. In: ACM Transactions on Knowledge Discovery from Data. 16(3): June 2022, pp 1-18.

Abstract: Temporal knowledge graph (TKG) representation learning embeds relations and entities into a continuous low-dimensional vector space by incorporating temporal information. Recent studies mainly focus on learning entity representations by modeling entity interactions from the neighbor structure of the graph. However, they neglect the interactions of relations in the neighbor structure, which are also important for learning informative representations. In addition, an effective historical relation encoder for modeling multi-range temporal dependencies is still lacking. In this article, we propose a dual graph convolution network-based TKG representation learning method using historical relations (DACHA). Specifically, we first construct the primal graph from historical relations, as well as the edge graph, which treats historical relations as nodes. We then employ the dual graph convolution network to capture the interactions of both entities and historical relations from the neighbor structure of the graph. In addition, a temporal self-attentive historical relation encoder is proposed to explicitly model both local and global temporal dependencies. Extensive experiments on two event-based TKG datasets demonstrate that DACHA achieves state-of-the-art results. [full text] [link]
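
An edge graph that treats relations as nodes can be built like a line graph: each fact becomes a node, and two facts are connected when they share an entity. The sketch below shows that generic construction on hypothetical triples. It is an assumption about the idea, not DACHA's exact procedure, and it ignores timestamps.

```python
from itertools import combinations


def build_edge_graph(facts):
    """facts: list of (head, relation, tail) triples.

    Returns an adjacency dict mapping each fact index to the set of fact
    indices that share at least one entity with it.
    """
    by_entity = {}
    for i, (head, _, tail) in enumerate(facts):
        by_entity.setdefault(head, set()).add(i)
        by_entity.setdefault(tail, set()).add(i)
    adjacency = {i: set() for i in range(len(facts))}
    for members in by_entity.values():
        for a, b in combinations(sorted(members), 2):
            adjacency[a].add(b)
            adjacency[b].add(a)
    return adjacency


facts = [("A", "visit", "B"), ("B", "sign", "C"), ("D", "meet", "E")]
print(build_edge_graph(facts))  # {0: {1}, 1: {0}, 2: set()}
```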

-

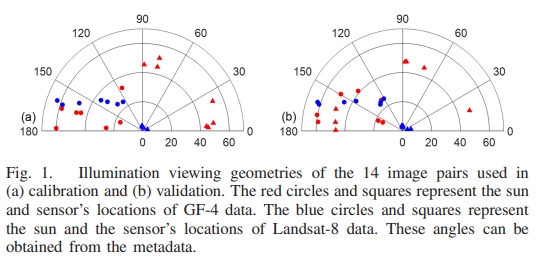

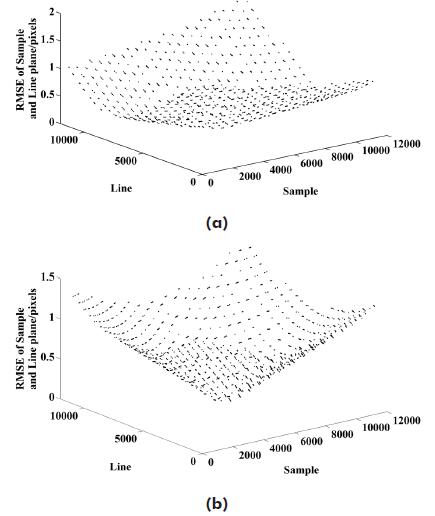

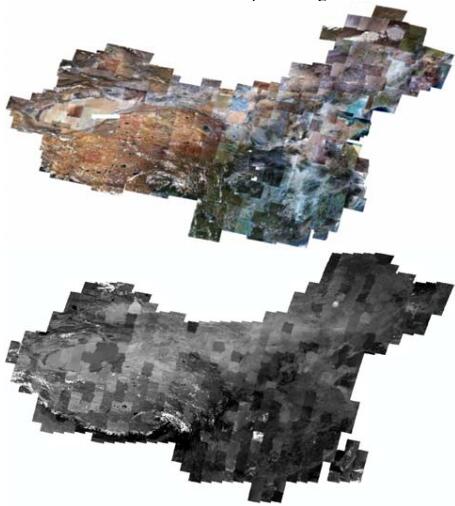

Jun Lu, Tao He, Shunlin Liang, . (2022) An Automatic Radiometric Cross-Calibration Method for Wide-Angle Medium-Resolution Multispectral Satellite Sensor Using Landsat Data. In: IEEE Transactions on Geoscience and Remote Sensing, 2022, 60.

Abstract: Radiometric calibration of medium-resolution satellite data is critical for monitoring and quantifying changes in the Earth's environment and resources. Many medium-resolution satellite sensors have irregular revisits and sometimes differ greatly from a reference sensor in illumination-viewing geometry, posing a great challenge for routine cross-calibration. To overcome these issues, this study proposes a method to cross-calibrate medium-resolution multispectral data. Chinese Gaofen-4 (GF-4) panchromatic and multispectral sensor (PMS) data with large viewing angles were used as the test data, and Landsat-8 Operational Land Imager (OLI) data were used as the reference data. A bidirectional reflectance distribution function (BRDF) correction method was proposed to eliminate the effects of differences in illumination-viewing geometry between GF-4 and Landsat-8. Validation using concurrent images shows that the mean relative error (MRE) of the cross-calibration is less than 6.65%. Validation using ground measurements shows that our calibration results improve by around 14.8% over the officially released calibration coefficients. Time-series cross-calibration reveals that, because simultaneous nadir observations (SNOs) are not required, our calibration can be carried out more often in practice. Gradual and continuous radiometric degradation of the sensor is identified with the monthly updated calibration coefficients, demonstrating the reliability and importance of timely cross-calibration. In addition, the approach does not rely on any specific calibration site and accounts well for differences in illumination-viewing geometry, so it can easily be adapted to other optical satellite data. [full text] [link]
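
Cross-calibration ultimately yields calibration coefficients that map sensor digital numbers (DN) to radiance predicted from the reference sensor. The sketch below assumes a simple linear model, radiance = gain × DN + offset, fitted by ordinary least squares, and evaluates it with the mean relative error. The linear model, the variable names, and the toy values are assumptions; the paper's BRDF correction and spectral adjustments are not modeled.

```python
def fit_gain_offset(dn, reference):
    """Ordinary least-squares fit of reference ≈ gain * dn + offset."""
    n = len(dn)
    mean_x = sum(dn) / n
    mean_y = sum(reference) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(dn, reference))
    sxx = sum((x - mean_x) ** 2 for x in dn)
    gain = sxy / sxx
    offset = mean_y - gain * mean_x
    return gain, offset


def mean_relative_error(predicted, reference):
    """MRE = mean(|predicted - reference| / reference)."""
    return sum(abs(p - r) / r for p, r in zip(predicted, reference)) / len(reference)


dn = [100, 200, 300]        # hypothetical test-sensor DNs
reference = [12, 22, 32]    # hypothetical reference-predicted radiances
gain, offset = fit_gain_offset(dn, reference)
print(gain, offset)  # approximately 0.1 and 2.0
```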

-

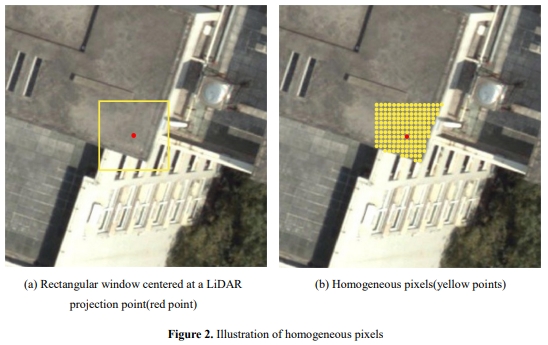

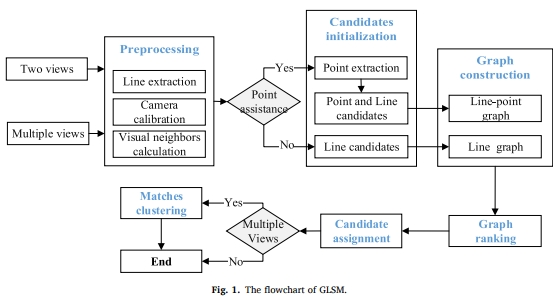

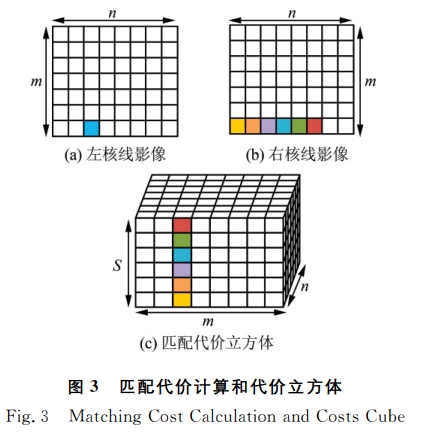

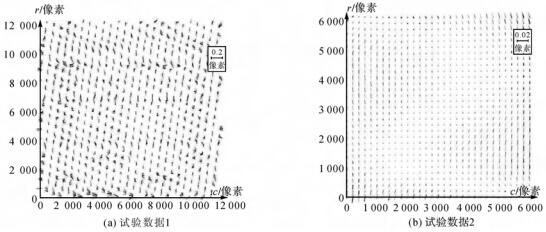

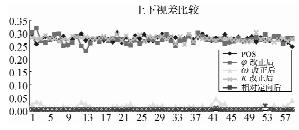

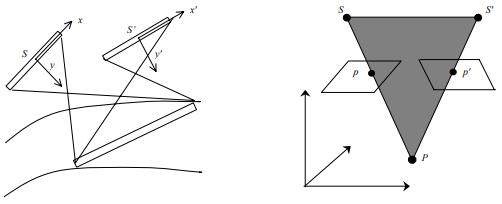

, Siyuan Zou, Xinyi Liu, Xu Huang, Yi Wan, Yongxiang Yao. (2022) LiDAR-Guided Stereo Matching with a Spatial Consistency Constraint. In: ISPRS Journal of Photogrammetry and Remote Sensing, 2022, 183, 164-177.

Abstract: The complementary fusion of light detection and ranging (LiDAR) data and image data is a promising but challenging task for generating high-precision and high-density point clouds. This study proposes an innovative LiDAR-guided stereo matching approach (LGSM), which considers the spatial consistency represented by continuous disparity or depth changes in the homogeneous regions of an image. LGSM first detects the homogeneous pixels of each LiDAR projection point based on their color or intensity similarity. Next, we propose a riverbed enhancement function to optimize the cost volume of the LiDAR projection points and their homogeneous pixels, improving matching robustness. Guided by image information, our formulation expands the constraint scope of the sparse LiDAR projection points so as to optimize the cost volume of as many pixels as possible. We applied LGSM to semi-global matching and AD-Census on both simulated and real datasets. When the proportion of LiDAR points in the simulated datasets was 0.16%, our method achieved subpixel matching accuracy, while that of the original stereo matching algorithm was 3.4 pixels. The experimental results show that LGSM is suitable for indoor, street, aerial, and satellite image datasets and transfers well between semi-global matching and AD-Census. Furthermore, qualitative and quantitative evaluations demonstrate that LGSM is superior to two state-of-the-art cost-volume optimization methods, especially in reducing mismatches in difficult matching areas and refining object boundaries. [full text] [link]
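
The first LGSM step detects pixels that are homogeneous with a LiDAR projection point by color or intensity similarity. The sketch below is a hypothetical simplification of that step on a grayscale image: it uses a square window and an absolute intensity threshold, both of which are illustrative assumptions rather than the paper's criterion.

```python
def homogeneous_pixels(image, row, col, radius=2, max_diff=10):
    """Return (r, c) pixels in a square window around a LiDAR projection point
    whose intensity differs from the point's pixel by at most max_diff.

    image: 2-D list of grayscale intensities. radius and max_diff are
    hypothetical parameters chosen for illustration.
    """
    center = image[row][col]
    height, width = len(image), len(image[0])
    result = []
    for r in range(max(0, row - radius), min(height, row + radius + 1)):
        for c in range(max(0, col - radius), min(width, col + radius + 1)):
            if abs(image[r][c] - center) <= max_diff:
                result.append((r, c))
    return result


image = [[100, 105, 200],
         [98, 100, 200],
         [200, 200, 200]]
print(homogeneous_pixels(image, 1, 1, radius=1))  # [(0, 0), (0, 1), (1, 0), (1, 1)]
```

The pixels returned this way are the ones whose cost volume a LiDAR point would then influence, which is how a sparse point can constrain a larger region.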

-

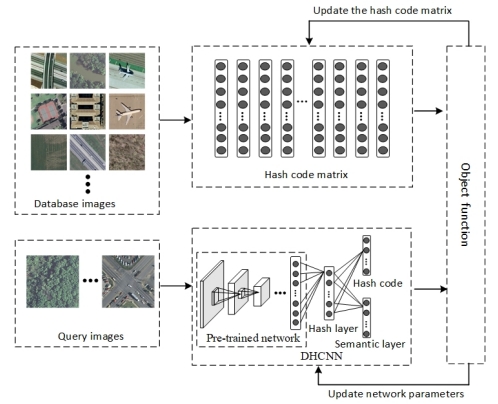

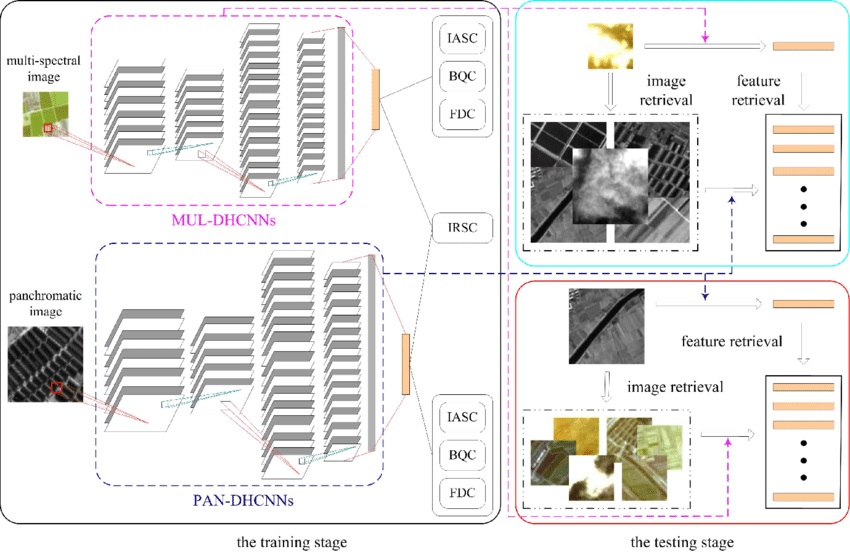

Weiwei Song, Zhi Gao, Renwei Dian, Pedram Ghamisi, , Jon Atli Benediktsson. (2022) Asymmetric Hash Code Learning for Remote Sensing Image Retrieval. In: IEEE Transactions on Geoscience and Remote Sensing, 2022, 60, 5617514.

Abstract: Remote sensing image retrieval (RSIR), which aims to find a set of items similar to a given query image, is a very important task in remote sensing applications. Deep hashing, the current mainstream approach, has achieved satisfactory retrieval performance. On the one hand, various deep neural networks are used to extract semantic features from remote sensing images. On the other hand, hashing techniques are then adopted to map the high-dimensional deep features to low-dimensional binary codes. Such methods learn one hash function for both the query and database samples in a symmetric way. However, as the number of database samples increases, generating hash codes for large-scale database images typically becomes time-consuming. In this article, we propose a novel deep hashing method, named asymmetric hash code learning (AHCL), for RSIR. AHCL generates the hash codes of query and database images in an asymmetric way: the hash codes of query images are obtained by binarizing the output of the network, while the hash codes of database images are learned directly by solving the designed objective function. In addition, we combine the semantic information of each image and the similarity information of image pairs as supervision to train a deep hashing network, which improves the representation ability of the deep features and hash codes. Experimental results on three public datasets demonstrate that the proposed method outperforms symmetric methods in both retrieval accuracy and efficiency. The source code is available at https://github.com/weiweisong415/Demo_AHCL_for_TGRS2022. [full text] [link]
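
At query time, an asymmetric scheme like the one described binarizes the network output for the query and compares it with stored database codes. A minimal sketch of that retrieval step with Hamming-distance ranking is shown below. The database codes and network output are hypothetical stand-ins, mapping an output of exactly 0 to +1 is an arbitrary choice, and the training that learns the database codes is not shown.

```python
def binarize(outputs):
    """Sign binarization of real-valued network outputs into {-1, +1} codes."""
    return [1 if v >= 0 else -1 for v in outputs]


def hamming(a, b):
    """Number of positions at which two codes differ."""
    return sum(x != y for x, y in zip(a, b))


def rank_database(query_output, database_codes):
    """Return database indices sorted by Hamming distance to the query code."""
    q = binarize(query_output)
    return sorted(range(len(database_codes)), key=lambda i: hamming(q, database_codes[i]))


database_codes = [[1, 1, 1, 1], [1, -1, 1, -1], [-1, 1, -1, 1]]  # hypothetical
print(rank_database([0.9, -0.2, 0.4, -0.7], database_codes))  # [1, 0, 2]
```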

-

Yongxiang Yao, , Yi Wan, Xinyi Liu, Xiaohu Yan, Jiayuan Li. (2022) Multi-Modal Remote Sensing Image Matching Considering Co-Occurrence Filter. In: IEEE Transactions on Image Processing, 2022, 31, 2584-2597.

Abstract: Traditional image feature matching methods cannot obtain satisfactory results for multi-modal remote sensing images (MRSIs) in most cases, because different imaging mechanisms introduce significant nonlinear radiation distortion (NRD) and complicated geometric distortion. The key to MRSI matching is to weaken or eliminate the NRD and extract more edge features. This paper introduces a new robust MRSI matching method based on co-occurrence filter (CoF) space matching (CoFSM). Our algorithm has three steps: (1) a new co-occurrence scale space based on CoF is constructed, and feature points in this scale space are extracted using the optimized image gradient; (2) the gradient location and orientation histogram algorithm is used to construct a 152-dimensional log-polar descriptor, which makes the multi-modal image description more robust; and (3) a position-optimized Euclidean distance function is established, which calculates the displacement error of the feature points in the horizontal and vertical directions to optimize the matching distance function. The optimized results are then rematched, and outliers are eliminated using a fast sample consensus algorithm. We compared CoFSM with the scale-invariant feature transform (SIFT), upright-SIFT, PSO-SIFT, and radiation-variation insensitive feature transform (RIFT) methods on a multi-modal image dataset, evaluating each method comprehensively, both qualitatively and quantitatively. The experimental results show that CoFSM obtains satisfactory results in both the number of corresponding points and the root mean square error. The average number of matches obtained is 489.52 for CoFSM and 412.52 for RIFT. The matching performance of the proposed method was significantly better than that of the three state-of-the-art methods, and CoFSM achieved good effectiveness and robustness. Executable programs of CoFSM and the MRSI datasets are available at https://skyearth.org/publication/project/CoFSM/. [full text] [link]

-

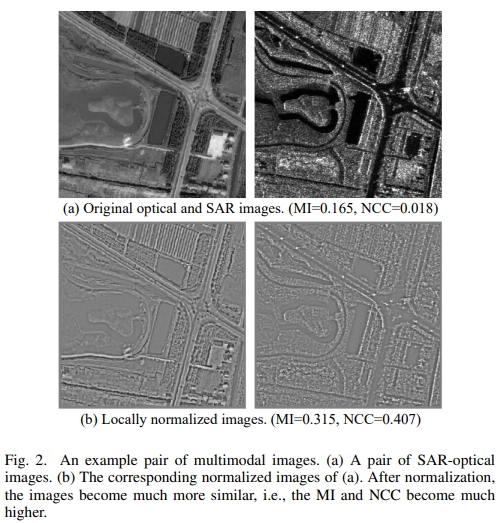

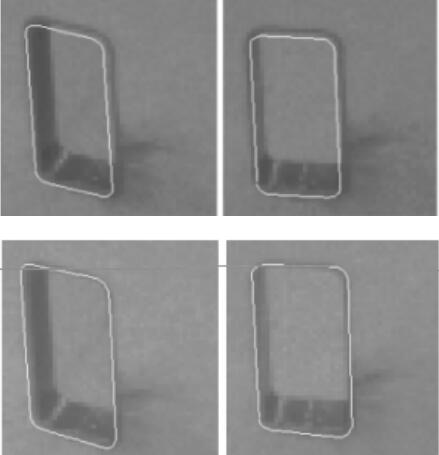

Jiayuan Li, Wangyi Xu, Pengcheng Shi, , Qingwu Hu. (2022) LNIFT: Locally Normalized Image for Rotation Invariant Multimodal Feature Matching. In: IEEE Transactions on Geoscience and Remote Sensing, 2022, 60, 5621314.

Abstract: Severe nonlinear radiation distortion (NRD) is the bottleneck problem of multimodal image matching. Although many efforts have been made in the past few years, such as the radiation-variation insensitive feature transform (RIFT) and the histogram of orientated phase congruency (HOPC), almost all these methods rely on frequency-domain information, which incurs high computational overhead and a large memory footprint. In this article, we propose a simple but very effective multimodal feature matching algorithm in the spatial domain, called the locally normalized image feature transform (LNIFT). We first propose a local normalization filter to convert original images into normalized images for feature detection and description, which greatly reduces the NRD between multimodal images. We demonstrate that normalized matching pairs have a much larger correlation coefficient than the original ones. We then detect oriented FAST and rotated BRIEF (ORB) keypoints on the normalized images and use an adaptive nonmaximal suppression (ANMS) strategy to improve the distribution of keypoints. We also describe keypoints on the normalized images with a descriptor similar to the histogram of oriented gradients (HOG). LNIFT achieves rotation invariance in the same way as ORB, without any additional computational overhead. Thus, LNIFT runs in near real time on images of 1024 × 1024 pixels (only 0.32 s with 2500 keypoints). Four multimodal image datasets with a total of 4000 matching pairs, including synthetic aperture radar (SAR)-optical, infrared-optical, and depth-optical datasets, are used for comprehensive evaluation. Experimental results show that LNIFT is far superior to RIFT in terms of efficiency (0.49 s versus 47.8 s on a 1024 × 1024 image), success rate (99.9% versus 79.85%), and number of correct matches (309 versus 119). The source code and datasets will be publicly available at https://ljy-rs.github.io/web. [full text] [link]
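
A local normalization filter subtracts a local mean and divides by a local spread, which removes local gain and offset differences between modalities. The sketch below uses a generic local mean and standard deviation normalization in a square window. This is an assumed form for illustration; LNIFT's exact filter may differ. Note that it cancels only affine intensity changes, so it reduces, rather than eliminates, nonlinear distortion.

```python
import math


def local_normalize(image, radius=1, eps=1e-6):
    """Normalize each pixel by the mean and standard deviation of its
    (2*radius+1)^2 neighbourhood, clipped at the image border.

    eps avoids division by zero in flat regions. Generic sketch, not LNIFT's filter.
    """
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = [image[rr][cc]
                      for rr in range(max(0, r - radius), min(h, r + radius + 1))
                      for cc in range(max(0, c - radius), min(w, c + radius + 1))]
            mean = sum(window) / len(window)
            std = math.sqrt(sum((v - mean) ** 2 for v in window) / len(window))
            out[r][c] = (image[r][c] - mean) / (std + eps)
    return out


img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
brighter = [[2 * v + 10 for v in row] for row in img]  # affine intensity change
print(local_normalize(img)[1][1], local_normalize(brighter)[1][1])  # both near 0
```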

-

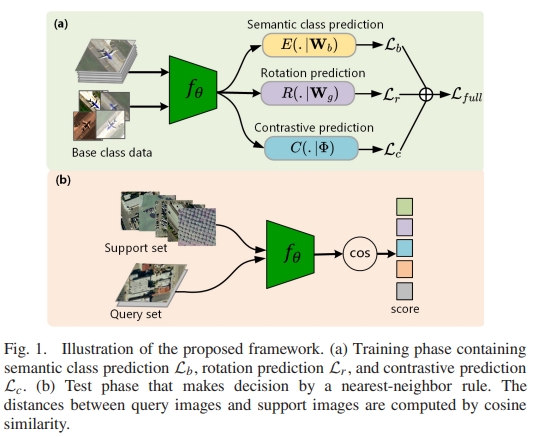

Hong Ji, Zhi Gao, , Yu Wan, Can Li, Tiancan Mei. (2022) Few-Shot Scene Classification of Optical Remote Sensing Images Leveraging Calibrated Pretext Tasks. In: IEEE Transactions on Geoscience and Remote Sensing, 2022, 60, 5625513.

Abstract: Small data hold big artificial intelligence (AI) potential. As one of the promising small-data AI approaches, few-shot learning aims to efficiently learn a model that can recognize novel classes from extremely limited training samples. It is therefore critical to accumulate useful prior knowledge from a large-scale base-class dataset. To realize few-shot scene classification of optical remote sensing images, we start from a baseline model that trains on all base classes with a standard cross-entropy loss and add two auxiliary objectives to capture intrinsic characteristics across semantic classes. Specifically, rotation prediction learns to recognize the 2-D rotation of an input to guide the learning of class-transferable knowledge, and contrastive learning pulls together positive pairs while pushing apart negative pairs to promote intraclass consistency and interclass inconsistency. We jointly optimize these two pretext tasks and the semantic class prediction task in an end-to-end manner. To further overcome overfitting, we introduce a regularization technique, adversarial model perturbation, to calibrate the pretext tasks and enhance generalization. Extensive experiments on public remote sensing benchmarks, including Northwestern Polytechnical University (NWPU)-RESISC45, the aerial image dataset (AID), and Wuhan University (WHU)-remote sensing (RS)-19, demonstrate that our method works effectively and achieves the best performance, significantly outperforming many state-of-the-art approaches. [full text] [link]
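
The rotation-prediction pretext task needs self-labelled training pairs: each image is rotated by 0, 90, 180, and 270 degrees, and the rotation index becomes the label. The sketch below generates such pairs for a 2-D list image. It only illustrates how the pretext samples are formed; the classifier, the contrastive objective, and the adversarial model perturbation are not modeled.

```python
def rot90(grid):
    """Rotate a 2-D list 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*grid)][::-1]


def rotation_pretext_samples(image):
    """Return [(rotated_image, k)] where label k means k * 90 degrees CCW."""
    samples = []
    current = image
    for k in range(4):
        samples.append((current, k))
        current = rot90(current)
    return samples


for rotated, label in rotation_pretext_samples([[1, 2], [3, 4]]):
    print(label, rotated)
```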

-

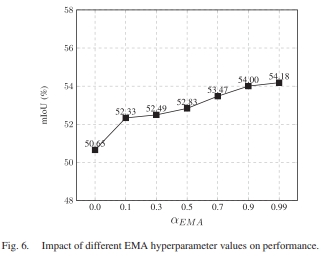

Bin Zhang, , Yansheng Li, Yi Wan, Haoyu Guo, Zhi Zheng, Kun Yang. (2022) Semi-Supervised Deep Learning via Transformation Consistency Regularization for Remote Sensing Image Semantic Segmentation. In: IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2022.

Abstract: Deep convolutional neural networks (CNNs) have attracted considerable attention in recent years, especially in domains such as computer vision (CV) and remote sensing (RS). However, the superior performance of deep networks depends heavily on a massive amount of accurately labeled training samples. In real-world applications, gathering a large number of labeled samples is time-consuming and labor-intensive, especially for pixel-level annotation. This dearth of labels for land-cover classification is especially pressing in the RS domain, because high-precision, high-quality labeled samples are extremely difficult to acquire, whereas unlabeled data are readily available. In this study, we propose a new semi-supervised deep semantic labeling framework for the semantic segmentation of high-resolution RS images that takes advantage of both the limited labeled examples and the numerous unlabeled samples. Our model uses transformation consistency regularization (TCR) to encourage consistent network predictions under different random transformations or perturbations. We test three different transformations for computing the consistency loss and analyze their performance. We then present a deep semi-supervised semantic labeling technique based on hybrid transformation consistency regularization (HTCR). In this technique, the network parameters are updated with a weighted sum of losses consisting of a supervised term computed on labeled samples and an unsupervised regularization term computed on unlabeled data. Comprehensive experiments on two RS datasets confirm that the proposed approach exploits latent information in unlabeled samples to obtain more precise predictions and outperforms existing semi-supervised algorithms. They further demonstrate that our semi-supervised semantic labeling strategy can partially address the problem of limited labeled samples for high-resolution RS image land-cover segmentation. [full text] [link]
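
The weighted loss described above can be sketched on a 1-D "image" of per-pixel class probabilities: cross-entropy on labeled pixels, plus a consistency term that compares the prediction on an unlabeled input with the prediction on its flipped version, flipped back so that pixels line up. Using only a horizontal flip, a mean-squared consistency term, and the toy models are assumptions; the paper's hybrid transformations (HTCR) are not reproduced.

```python
import math


def hflip(row):
    return row[::-1]


def supervised_loss(model, row, labels):
    """Mean per-pixel cross-entropy on a labeled row."""
    probs = model(row)
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(row)


def consistency_loss(model, row):
    """Mean squared difference between predictions on x and on flip(x),
    after mapping the flipped predictions back to the original pixel order."""
    p = model(row)
    q = hflip(model(hflip(row)))
    n = sum(len(v) for v in p)
    return sum((a - b) ** 2 for pv, qv in zip(p, q) for a, b in zip(pv, qv)) / n


def total_loss(model, labeled, unlabeled, weight=1.0):
    """Supervised term plus weighted unsupervised consistency term."""
    sup = sum(supervised_loss(model, r, y) for r, y in labeled) / len(labeled)
    unsup = sum(consistency_loss(model, r) for r in unlabeled) / len(unlabeled)
    return sup + weight * unsup


def pixel_model(row):
    """Hypothetical per-pixel model: P(class 1) equals the pixel value."""
    return [[1 - v, v] for v in row]


def biased_model(row):
    """Hypothetical position-dependent model, not flip-equivariant."""
    return [[1 - v * (i + 1) / len(row), v * (i + 1) / len(row)]
            for i, v in enumerate(row)]


print(total_loss(pixel_model, [([0.5, 0.5], [1, 0])], [[0.1, 0.9]]))  # log(2)
```

A per-pixel model is flip-equivariant, so its consistency term is zero. A position-dependent one is penalized, which is the behaviour the regularizer is meant to encourage against.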

-

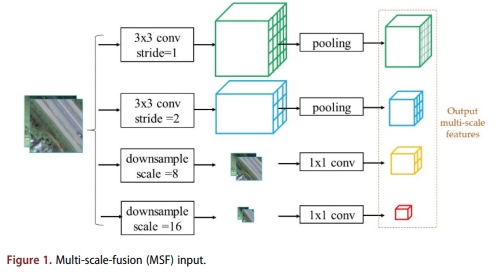

Zhi Zheng, Yi Wan, , Kun Yang, Rang Xiao, Chao Lin, Qiong Wu, Daifeng Peng. (2022) EMS-CDNet: an Efficient Multi-Scale-Fusion Change Detection Network for very High-resolution Remote Sensing Images. In: International Journal of Remote Sensing, 2022, 43(14), 5252-5279.

Abstract: Remote sensing image change detection (RSICD) is an essential means of monitoring changes on the Earth's surface. In recent years, the explosive growth of very high-resolution (VHR) satellite sensors and rapid innovation in deep learning have significantly advanced RSICD. However, most current RSICD models focus on accurately locating changed areas while neglecting efficiency, which limits their practical application, especially in large-scale and emergency RSICD tasks. In this paper, we propose an Efficient Multi-scale-fusion Change Detection Network (EMS-CDNet) for bi-temporal RSICD tasks. Rather than pursuing detection accuracy alone, EMS-CDNet emphasizes inference speed and the accuracy-efficiency trade-off. We designed a multi-scale fusion module for EMS-CDNet that uses multi-scale, multi-branch operations to extract multi-scale features simultaneously and aggregate features across feature levels. Besides enabling sufficient feature extraction, the multi-scale image input within this module alleviates the influence of image registration errors in practical applications, strengthening EMS-CDNet's value for real-world RSICD tasks. We also integrated a novel partition unit into EMS-CDNet to lighten the model while preserving its ability to detect small targets, thus shortening processing time without a severe loss of accuracy. We conducted experiments on two public RSICD benchmark datasets and on a dataset we collected ourselves. The public datasets were used to compare the overall accuracy and efficiency of EMS-CDNet with those of other methods, and our collected dataset was used to observe EMS-CDNet's performance under image registration errors.
Our experimental results show that EMS-CDNet achieved a better accuracy-efficiency trade-off than state-of-the-art (SOTA) methods on the public datasets. For example, EMS-CDNet reduced inference time by about 33% while maintaining the same detection accuracy as CLNet (the best of the compared methods). Furthermore, EMS-CDNet achieved higher accuracy on our collected dataset, with an F1 of 74% and an mIoU of 0.806, demonstrating its robustness to image registration errors and its value for practical RSICD applications. [full text] [link]
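As a rough illustration of the two metrics quoted above, the sketch below computes the F1 score of the "changed" class and the mean IoU over the changed and unchanged classes from binary change masks. It assumes flattened 0/1 masks and that mIoU averages over both classes; the paper's exact evaluation protocol may differ, and the function name is hypothetical.

```python
from typing import Sequence, Tuple


def change_detection_scores(pred: Sequence[int], gt: Sequence[int]) -> Tuple[float, float]:
    """Return (F1 of the changed class, mIoU over changed/unchanged) for 0/1 masks.

    Hypothetical helper: assumes flattened binary masks where 1 = changed.
    """
    if len(pred) != len(gt):
        raise ValueError("prediction and ground truth must have the same length")
    tp = fp = fn = tn = 0
    for p, g in zip(pred, gt):
        if p and g:
            tp += 1
        elif p and not g:
            fp += 1
        elif not p and g:
            fn += 1
        else:
            tn += 1

    def safe_div(num: float, den: float) -> float:
        return num / den if den else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    # Per-class IoU: overlap of predicted and true pixels divided by their union.
    iou_changed = safe_div(tp, tp + fp + fn)
    iou_unchanged = safe_div(tn, tn + fp + fn)
    return f1, (iou_changed + iou_unchanged) / 2


f1, miou = change_detection_scores([1, 1, 0, 0], [1, 0, 1, 0])
print(round(f1, 3), round(miou, 3))  # 0.5 0.333
```

Reporting IoU for both classes keeps the large unchanged background from dominating a single-class score, which is one common reason change detection papers quote F1 and mIoU together.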

-

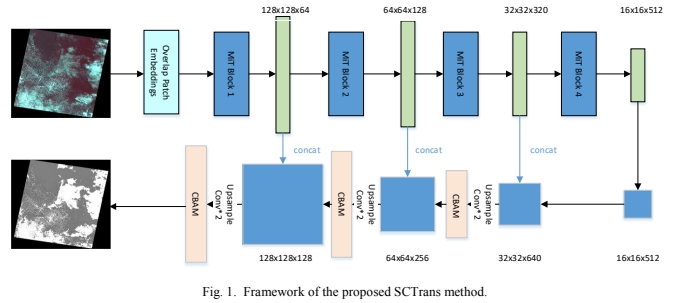

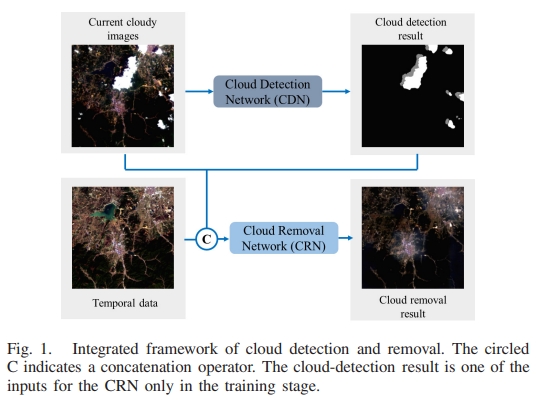

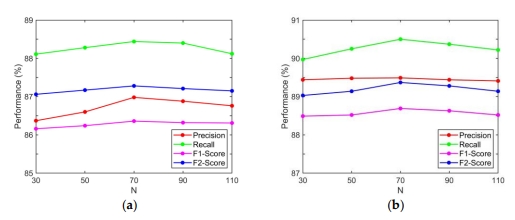

Wenke Jiao, , Bin Zhang, Yi Wan. (2022) SCTRANS: A Transformer Network Based on the Spatial and Channel Attention for Cloud Detection. In: International Geoscience and Remote Sensing Symposium (IGARSS), 2022, 2022-July, 615-618.

Abstract: Cloud detection is an important preprocessing step in remote sensing image processing and analysis. Most current deep-learning-based cloud detection methods are built on Convolutional Neural Networks (CNNs), which focus mainly on local information. To make better use of global information, in this paper we propose SCTrans, a transformer-based cloud detection method built on spatial and channel attention mechanisms. Experimental results show that, using only three-band images from the Landsat7 dataset, SCTrans reaches an mIoU of 85.92% on the validation set and 87.86% on the test set, and achieves a higher mIoU and F1 score than Fmask and other networks. [full text] [link]

-

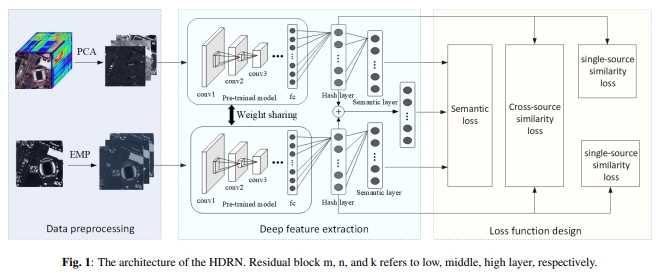

Weiwei Song, Zhi Gao, . (2022) Discriminative Feature Extraction and Fusion for Classification of Hyperspectral and Lidar Data. In: International Geoscience and Remote Sensing Symposium (IGARSS), 2022, 2022-July, 2271-2274.

Abstract: Multisource remote sensing data provide abundant and complementary information for land cover classification. In this paper, we propose a deep hashing-based feature extraction and fusion framework for the joint classification of hyperspectral images (HSIs) and LiDAR data. First, after preprocessing, the HSIs and LiDAR data are fed into a two-stream network to extract deep features. Then, we adopt a hashing technique to constrain single-source and cross-source similarities, i.e., samples of the same class should have a small feature distance and samples of different classes should have a large feature distance. Furthermore, a feature-level fusion strategy is used to fuse the two kinds of multisource information. Finally, we design an objective function that considers both the similarity information between sample pairs and the semantic information of each sample, yielding discriminative features for classification. Experiments on the Houston data demonstrate the effectiveness of the proposed method over several competitive approaches. [full text] [link]
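To make the similarity constraint described above concrete, here is a minimal sketch of a generic pairwise contrastive loss: same-class pairs are pulled together, different-class pairs are pushed at least `margin` apart, and features are binarized with a sign function to form hash codes. This is a standard stand-in, not the paper's objective; the margin value, the Euclidean distance, and all function names are assumptions.

```python
import math
from typing import List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pairwise_contrastive_loss(
    feats: Sequence[Sequence[float]], labels: Sequence[int], margin: float = 2.0
) -> float:
    """Mean contrastive loss over all unordered sample pairs (hypothetical helper).

    Same class: penalize the squared distance.
    Different class: penalize the squared shortfall below the margin.
    """
    total, pairs = 0.0, 0
    for i in range(len(feats)):
        for j in range(i + 1, len(feats)):
            d = euclidean(feats[i], feats[j])
            if labels[i] == labels[j]:
                total += d ** 2
            else:
                total += max(0.0, margin - d) ** 2
            pairs += 1
    return total / pairs if pairs else 0.0


def to_hash_code(feat: Sequence[float]) -> List[int]:
    """Binarize a real-valued feature into a {-1, +1} hash code."""
    return [1 if x >= 0 else -1 for x in feat]
```

A cross-source version of the same idea would evaluate the loss on pairs formed from one HSI feature and one LiDAR feature rather than on pairs within a single stream.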

-

Bin Zhang, Yi Wan, , Yansheng Li. (2022) JSH-Net: Joint Semantic Segmentation and Height Estimation using Deep Convolutional Networks from Single High-resolution Remote Sensing Imagery. In: International Journal of Remote Sensing, 2022, 43(17), 6307-6332.

Abstract: Semantic segmentation of high-resolution remote sensing imagery is a pivotal component of land use and land cover classification, and height estimation is essential for recovering the 3D information of an image. Because of large intra-class variation and small inter-class differences, these two challenging tasks are generally treated separately. This paper proposes a fully convolutional network that tackles both problems simultaneously by estimating the land-cover category and height value of each pixel from a single aerial image. To this end, we develop a multi-task learning architecture (JSH-Net) that employs a shared feature representation and exploits the potential consistency across tasks, resulting in robust features and better prediction accuracy. Specifically, we propose a novel skip connection module that aggregates contexts from the encoder to the decoder, bridging the semantic gap between them. In addition, we propose a progressive refinement strategy to recover detailed object information. We also add a height estimation branch to the head of the model to make use of the shared features. Experiments on the ISPRS 2D Labelling dataset verified that our network produces precise semantic segmentation and height estimation results from its two output branches and outperforms other state-of-the-art approaches. [full text] [link]

-

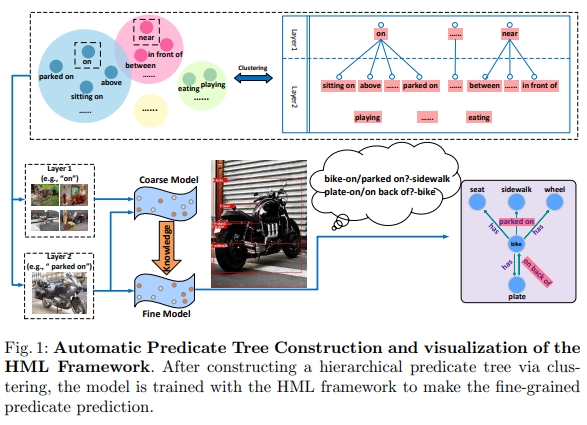

Youming Deng, Yansheng Li, , Xiang Xiang, Jian Wang, Jingdong Chen, Jiayi Ma. (2022) Hierarchical Memory Learning for Fine-Grained Scene Graph Generation. In: Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 2022, 13687 LNCS, 266-283.

Abstract: In Scene Graph Generation (SGG), crowd-sourced labeling mixes coarse and fine predicates in the dataset, and the long-tail problem is also pronounced. Given this difficult situation, many existing SGG methods treat all predicates equally and learn the model in one stage under the supervision of mixed-granularity predicates, leading to relatively coarse predictions. To alleviate the impact of suboptimal mixed-granularity annotation and the long-tail effect, this paper proposes a novel Hierarchical Memory Learning (HML) framework that learns the model from simple to complex, similar to the hierarchical way in which humans memorize and learn. After coarse and fine predicates are partitioned autonomously, the model is first trained on the coarse predicates and then learns the fine predicates. To realize this hierarchical learning pattern, this paper, for the first time, formulates the HML framework using new Concept Reconstruction (CR) and Model Reconstruction (MR) constraints. Notably, the HML framework can serve as a general optimization strategy to improve various SGG models, and it achieves significant improvement on the SGG benchmark. [full text] [link]

-

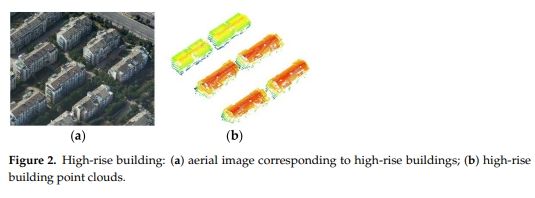

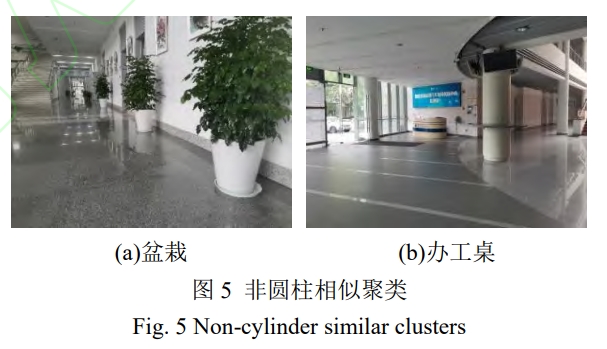

Wangshan Yang, Xinyi Liu, , Yi Wan, Zheng Ji. (2022) Object Based Building Instance Segmentation from Airborne LiDAR Point Clouds. In: International Journal of Remote Sensing, 2022, 43(18), 6783-6808.

Abstract: Building instance segmentation is of great importance to the parallel reconstruction, management and analysis of building instances. Previous studies mainly focused on building scenes in which the spacing between buildings is much larger than the point spacing, whereas accuracy remains low for complex building scenes and for building point clouds in which the spacing between buildings is similar to the point spacing. To improve the accuracy of building instance segmentation in complex building scenes, we propose a novel object-based building instance segmentation (OBBIS) method for airborne light detection and ranging (LiDAR) point clouds. First, our method divides building point clouds into objects, which are then classified as building roof plane objects, roof accessory objects, or building facade objects according to their characteristics. Second, each object is represented as a node, and a fixed-size feature vector is inferred for each node. Third, a vertical-cylinder neighbour-node graph is constructed. Finally, an energy function is constructed from the relationships between nodes, and the objects are merged by energy minimization (that is, objects are merged with minimum energy to obtain the building instances). Comprehensive experiments on benchmark datasets demonstrate that the proposed OBBIS method outperforms eight state-of-the-art building instance segmentation methods. [full text] [link]

-

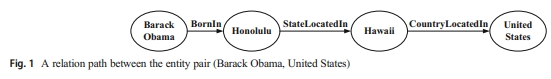

Ling Chen, Jun Cui, Xing Tang, Yuntao Qian, Yansheng Li, . (2022) RLPath: A Knowledge Graph Link Prediction Method using Reinforcement Learning based Attentive Relation Path Searching and Representation Learning. In: Applied Intelligence, 2022, 52(4), 4715-4726.

Abstract: Because they contain rich patterns between entities, relation paths have been widely used in knowledge graph link prediction. State-of-the-art link prediction methods that consider relation paths obtain them through reinforcement learning with a non-trainable reward setting and perform link prediction with the path-ranking algorithm (PRA), which ignores the information in entities. In this paper, we propose RLPath, a new link prediction method that exploits information in both relation paths and entities. It alternately trains a reinforcement learning model with a trainable reward setting to search for high-quality relation paths, and a translation-based model to perform link prediction. We also propose a novel reward setting for the reinforcement learning model that shares parameters with the attention of the translation-based model, so that these parameters not only measure the contributions of relation paths but also guide agents toward relation paths that contribute strongly to link prediction; the two models thus promote each other. In experiments, we compare RLPath with state-of-the-art link prediction methods, and the results show that RLPath achieves competitive performance. [full text] [link]
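The abstract does not specify the translation-based model. As an assumed illustration only, the sketch below uses a TransE-style energy ||h + r - t||_1, composes a relation path by adding its relation vectors (as in path-based TransE variants), and converts path-to-relation distances into softmax attention weights. The L1 norm, the additive composition, and all function names are assumptions, not RLPath's published formulation.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def l1(v: Vector) -> float:
    return sum(abs(x) for x in v)


def transe_distance(h: Vector, r: Vector, t: Vector) -> float:
    """TransE-style energy ||h + r - t||_1; lower means a more plausible triple."""
    return l1([hi + ri - ti for hi, ri, ti in zip(h, r, t)])


def compose_path(relations: Sequence[Vector]) -> List[float]:
    """Additive composition: a path r1 -> r2 -> ... is embedded as r1 + r2 + ..."""
    dim = len(relations[0])
    return [sum(rel[k] for rel in relations) for k in range(dim)]


def path_attention(r: Vector, paths: Sequence[Sequence[Vector]]) -> List[float]:
    """Softmax weights: paths whose composed vector lies closer to r get more weight."""
    scores = [-l1([a - b for a, b in zip(compose_path(p), r)]) for p in paths]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]
```

For instance, with `r = [1.0, 1.0]`, a two-hop path `[[1.0, 0.0], [0.0, 1.0]]` composes exactly to `r` and therefore receives a larger weight than a path composing to `[0.0, 0.0]`.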

-

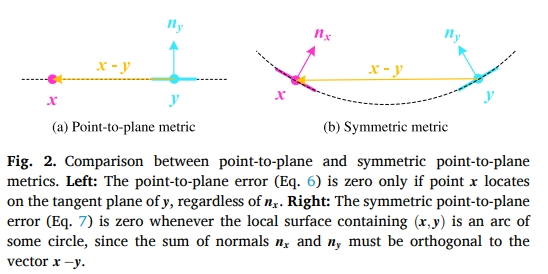

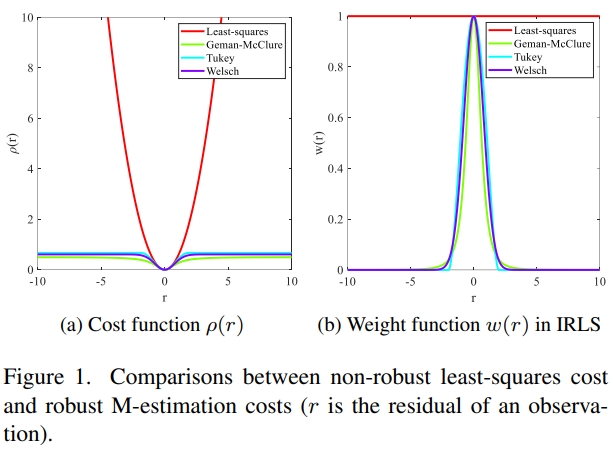

Jiayuan Li, Qingwu Hu, , Mingyao Ai. (2022) Robust Symmetric Iterative Closest Point. In: ISPRS Journal of Photogrammetry and Remote Sensing, 2022, 185, 219-231.

Abstract: Point cloud registration (PCR) is an important technique in 3D vision that has been widely applied in areas such as robotics and photogrammetry. The iterative closest point (ICP) algorithm is the de facto standard for PCR. However, it suffers mainly from two drawbacks: a small convergence basin and sensitivity to outliers and partial overlaps. In this paper, we propose a robust symmetric ICP (RSICP) to tackle these drawbacks. First, we present a new symmetric point-to-plane distance metric whose functional zero-set is a set of locally second-order surfaces. It has a wider convergence basin and higher convergence speed than the point-to-point metric, the point-to-plane metric, and even the original symmetric metric. Second, we introduce an adaptive robust loss to construct our robust symmetric metric. This robust loss bridges the gap between the non-robust ℓ2 cost and robust M-estimators. During optimization, we gradually increase the degree of robustness by decaying a robustness control parameter. Compared with recent work (e.g., Sparse ICP and Robust ICP), this loss has a high “breakdown” point or low computational overhead. We also present a simple but effective linearization of the alignment function based on the Rodrigues rotation parameterization under a small incremental rotation assumption. Extensive experiments on challenging datasets with noise, outliers, or partial overlaps show that the proposed algorithm significantly outperforms Sparse ICP and Robust ICP in both accuracy and efficiency. Our source code will be publicly available at https://ljy-rs.github.io/web. [full text] [link]
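For orientation, the sketch below shows the original symmetric point-to-plane residual, (p - q) · (n_p + n_q), together with the iteratively reweighted least squares (IRLS) weight of Barron's general robust loss, whose shape parameter `alpha` moves the loss from the non-robust ℓ2 case (`alpha = 2`) toward more robust M-estimators as it decreases. RSICP's own metric is a new symmetric form and its adaptive loss may differ; using Barron's loss as a stand-in, and the parameter names, are assumptions.

```python
from typing import Sequence

Vec3 = Sequence[float]


def symmetric_residual(p: Vec3, q: Vec3, n_p: Vec3, n_q: Vec3) -> float:
    """Original symmetric point-to-plane residual: (p - q) . (n_p + n_q)."""
    return sum((pi - qi) * (a + b) for pi, qi, a, b in zip(p, q, n_p, n_q))


def robust_weight(r: float, alpha: float, c: float) -> float:
    """IRLS weight rho'(r) / r of Barron's general robust loss (assumed stand-in).

    alpha = 2 is the non-robust L2 case (constant weight); smaller alpha
    down-weights large residuals more aggressively. c is the scale parameter.
    """
    if alpha == 2.0:
        return 1.0 / (c * c)
    base = (r / c) ** 2 / abs(alpha - 2.0) + 1.0
    return (1.0 / (c * c)) * base ** (alpha / 2.0 - 1.0)
```

Decaying `alpha` across ICP iterations (for example from 2 toward 0 or below) would mimic the gradual increase in robustness described in the abstract, since large residuals from outliers then receive progressively smaller weights.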

-

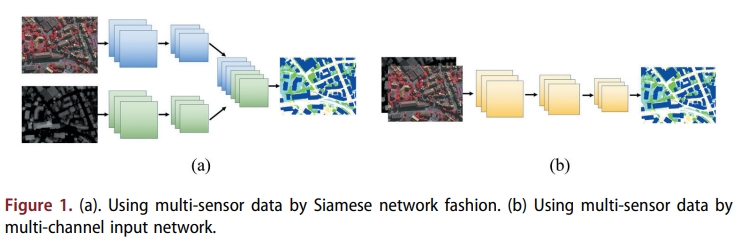

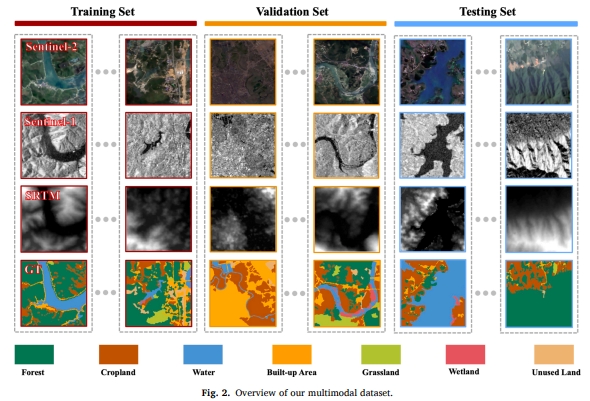

Yansheng Li, Yuhan Zhou, , Liheng Zhang, Jian Wang, Jingdong Chen. (2022) DKDFN: Domain Knowledge-Guided Deep Collaborative Fusion Network for Multimodal Unitemporal Remote Sensing Land Cover Classification. In: ISPRS Journal of Photogrammetry and Remote Sensing, 2022, 186, 170-189.

Abstract: Land use and land cover maps provide fundamental information used in many kinds of studies, ranging from public health to carbon cycling. However, existing remote sensing image classification methods suffer from insufficient use of multiple modalities, under-consideration of prior domain knowledge, and poor performance on minority classes. To alleviate these problems, we propose a novel domain knowledge-guided deep collaborative fusion network (DKDFN) for land cover classification that boosts performance on minority categories. More specifically, DKDFN adopts a multihead encoder and a multibranch decoder. The encoder architecture enables sufficient mining of complementary information from multiple modalities, which in our case are Sentinel-2, Sentinel-1, and SRTM Digital Elevation Data (SRTM). The multibranch decoder enables land cover classification in a multitask learning setup, performing semantic segmentation while reconstructing multimodal remote sensing indices, which are selected as representatives of domain knowledge. This design incorporates domain knowledge in an effective end-to-end manner. Training of DKDFN is supervised by our proposed asymmetry loss function (ALF), which boosts performance on nearly all categories, especially those with a low frequency of occurrence. Ablation studies suggest that our design logic is worth testing in any network with an encoder-decoder structure. The study is conducted in Hunan, China, and is verified using a self-labeled multimodal unitemporal remote sensing image dataset. Comparative experiments between DKDFN and six state-of-the-art models (U-Net, SegNet, PSPNet, DeepLab, HRNet, MP-ResNet) demonstrate the superiority of our method and suggest its potential for mapping land cover in other geographical areas where Sentinel-2, Sentinel-1, and SRTM data are available.
The dataset can be downloaded from https://github.com/LauraChow/HunanMultimodalDataset. [full text] [link]
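The abstract does not list which remote sensing indices DKDFN reconstructs. Purely as an assumed example of such domain-knowledge targets, the sketch below computes NDVI and the McFeeters NDWI per pixel from Sentinel-2 reflectance bands B3 (green), B4 (red), and B8 (near infrared); the choice of indices, the `eps` guard, and the helper names are assumptions.

```python
from typing import Dict, List, Sequence


def normalized_difference(
    a: Sequence[float], b: Sequence[float], eps: float = 1e-6
) -> List[float]:
    """Per-pixel (a - b) / (a + b); eps guards against division by zero."""
    return [(x - y) / (x + y + eps) for x, y in zip(a, b)]


def spectral_indices(
    b3_green: Sequence[float], b4_red: Sequence[float], b8_nir: Sequence[float]
) -> Dict[str, List[float]]:
    """Hypothetical auxiliary regression targets for a reconstruction branch."""
    return {
        "NDVI": normalized_difference(b8_nir, b4_red),    # vegetation vigour
        "NDWI": normalized_difference(b3_green, b8_nir),  # open water (McFeeters)
    }


idx = spectral_indices([0.1], [0.1], [0.5])
```

Because such indices are cheap, closed-form functions of the input bands, they can be recomputed on the fly as targets for an auxiliary decoder branch without extra labeling.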

-

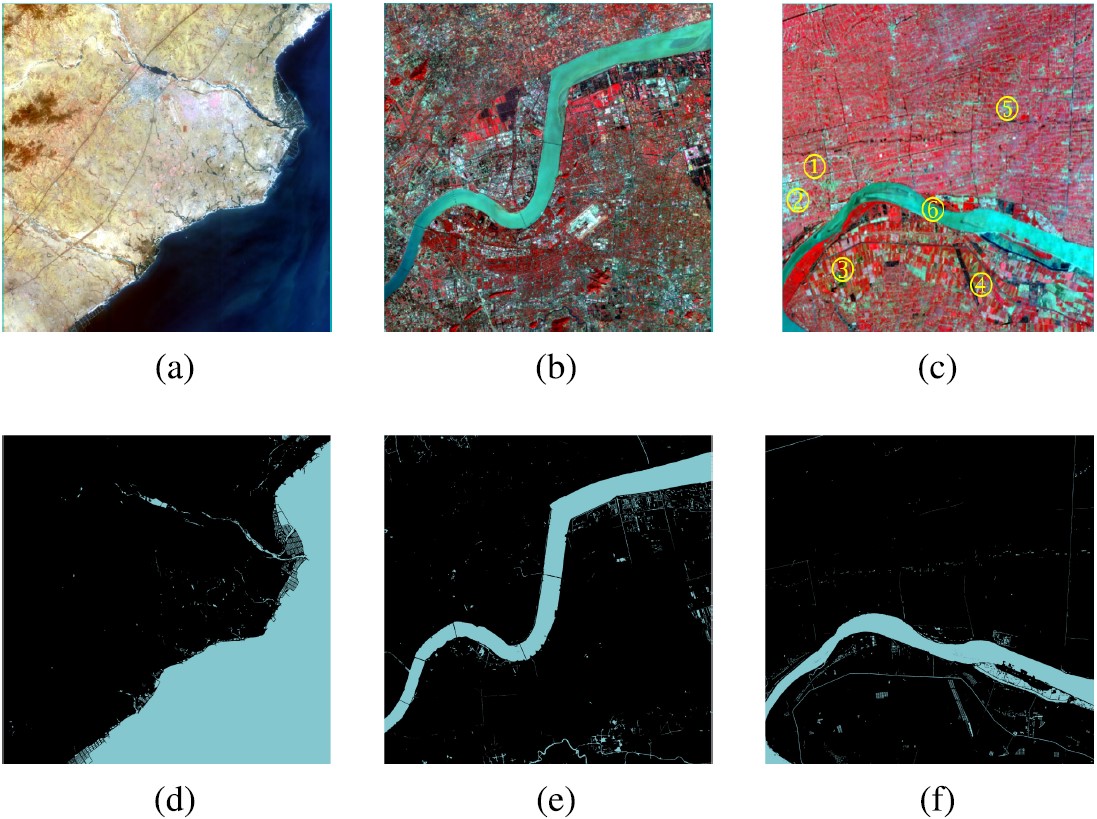

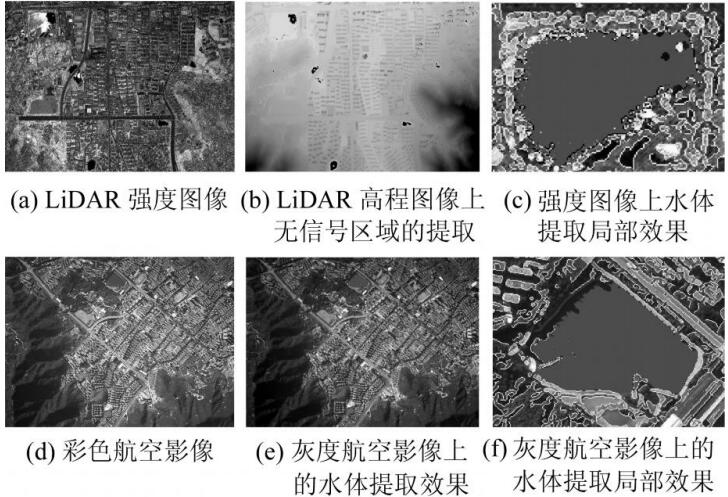

Yansheng Li, Bo Dang, , Zhenhong Du. (2022) Water Body Classification from High-Resolution Optical Remote Sensing Imagery: Achievements and Perspectives. In: ISPRS Journal of Photogrammetry and Remote Sensing, 2022, 187, 306-327.

Abstract: Water body classification from high-resolution optical remote sensing (RS) images, which aims to classify whether each pixel of an image is water or not, has become a hot topic in RS and has extensive practical applications in a variety of fields. Numerous existing methods have drawn broad attention and achieved remarkable advances; meanwhile, serious challenges and potential opportunities remain that deserve in-depth consideration and discussion. Because a comprehensive survey is still lacking, this paper compiles approximately 200 papers, summarizes and analyzes their achievements, and discusses perspectives on future research directions. Specifically, we first analyze five challenges arising from the characteristics of water bodies in high-resolution optical RS imagery, and then discuss five corresponding opportunities, enabled by advanced deep learning techniques, that respond to these challenges. Next, we divide existing methods into several groups according to their core ideas and introduce the main ones. In addition, we list practical applications and publicly available benchmarks. Ten and nine representative methods, respectively, are implemented on two widely used datasets to assess their performance. To facilitate qualitative and quantitative comparison in this research area, the two benchmarks used in the comparative experiments, together with links to other relevant datasets and open-source code, will be summarized and released at https://github.com/Jack-bo1220/Benchmarks-for-Water-Body-Extraction-from-HRORS-Imagery. Finally, we discuss a range of promising research directions to provide references and inspiration for future research.
Our study, covering existing methods, challenges, opportunities, derived applications, and future research directions, provides a fuller understanding of water body classification from high-resolution optical remote sensing imagery. [full text] [link]

-

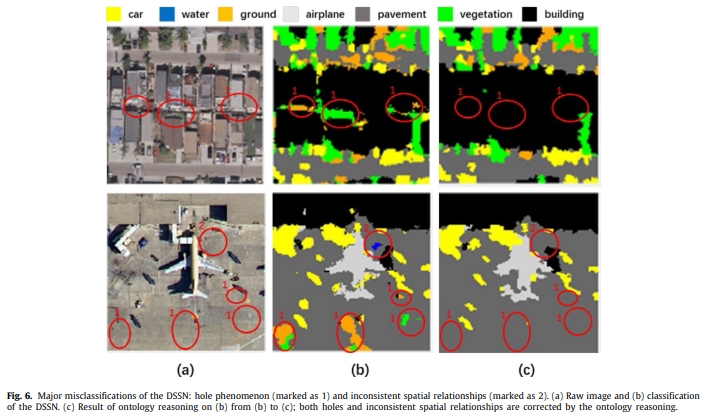

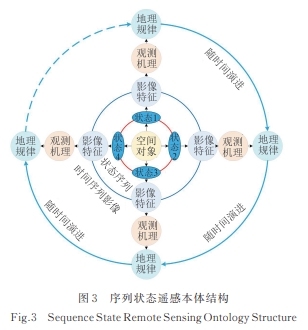

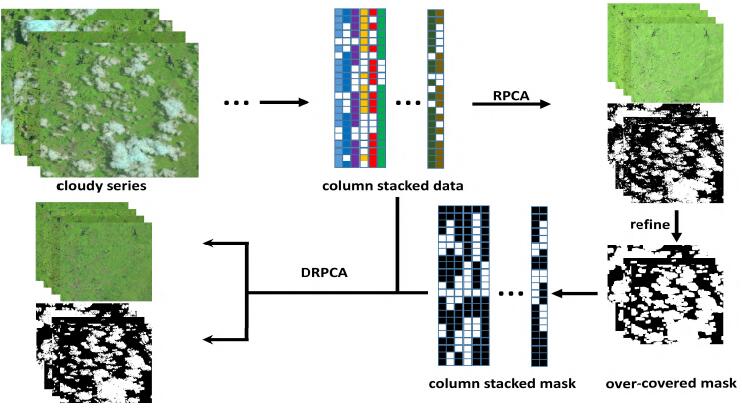

Yansheng Li, Song Ouyang, . (2022) Combining Deep Learning and Ontology Reasoning for Remote Sensing Image Semantic Segmentation. In: Knowledge-Based Systems, 2022, 243, 108469.